Top 6 data automation tools for 2026

.png)

Table of Contents

TL; DR

- Most data teams fail on trust, not talent. Manual pipelines silently break and undermine every decision.

- Point tools automate tasks. Platforms automate systems. The difference compounds fast at scale.

- Fragmented stacks create hidden costs in engineering time, reliability, and governance.

- Unified platforms reduce tool sprawl and make data analytics and AI initiatives viable.

- Teams consolidating onto platforms see lower TCO, faster insights, and fewer data failures.

There is a scene in Moneyball where Billy Beane looks at a room full of scouts and realizes something uncomfortable. Everyone is working hard. Everyone is experienced. And everyone is still wrong.

That moment feels familiar to many CTOs today.

Your teams are busy. Your data stack is expensive. Your dashboards exist. Yet every board meeting still starts with the same question.

“Can we trust these numbers?”

That is not a talent problem. That is not even a tooling problem. It is a data automation problem.

Most organizations still rely on manual effort disguised as pipelines. Scripts break. Schemas drift. Quality checks happen after decisions are made. By the time someone notices, the damage is already done.

Data automation tools exist to end that cycle.

In this article, we’ll explore how modern teams are ending this struggle using data automation tools, and turning their data into a competitive edge rather than a source of frustration.

The hidden toll of “fighting” your data

For many CTOs and data leaders, the daily reality is a barrage of data issues: broken dashboards, clashing KPIs, endless Slack threads asking “who owns this field?” Bad and siloed data come at a high cost. Much of that cost is hidden in wasted time and rework.

Bad data can also sabotage strategy. MIT even reported a 95% failure rate for generative AI pilots. When data is inconsistent or stale, advanced analytics and AI initiatives collapse. It’s the equivalent of trying to run a race with your shoes tied together.

All of this adds up to a sobering picture: talented people performing manual “data drudgery,” critical decisions delayed or derailed by poor data, and a mounting tab in wasted resources.

But it doesn’t have to be this way. Modern data automation tools are emerging to flip the script to get your data working for you automatically, so your team can focus on high-value work.

Stay tuned to find out which ones.

What are data automation tools, exactly?

Data automation tools refer to platforms and software that automate the collection, transformation, integration, and management of data across its lifecycle. Instead of engineers writing endless custom scripts or manually piecing together SQL queries, an automation platform handles tasks like extracting data from sources, loading it into a warehouse, cleaning and enriching it, running analyses or machine learning models, and delivering results to users – all with minimal human intervention.

In other words, the old approach was to buy a dozen different tools (the “car parts”): one for ingestion, one for the data warehouse, one for transformation, another for BI, etc. And then spend massive effort integrating them. The new approach is an end-to-end data platform that’s ready to drive off the lot, where those pieces come pre-integrated.

Also read: Friends Don’t Let Friends Build a Data Platform

Modern automation also means leveraging open-source and modular architecture. Unlike legacy “all-in-one” systems that were black boxes, today’s unified platforms are often built on open-source engines (for example, using Apache Airflow for orchestration or dbt for transformations) and offer modular components.

That means you can adopt the whole platform or swap in your preferred tool at a given layer if needed. Flexibility is key – you get the simplicity of one platform without the lock-in of a single vendor’s proprietary system.

Note:

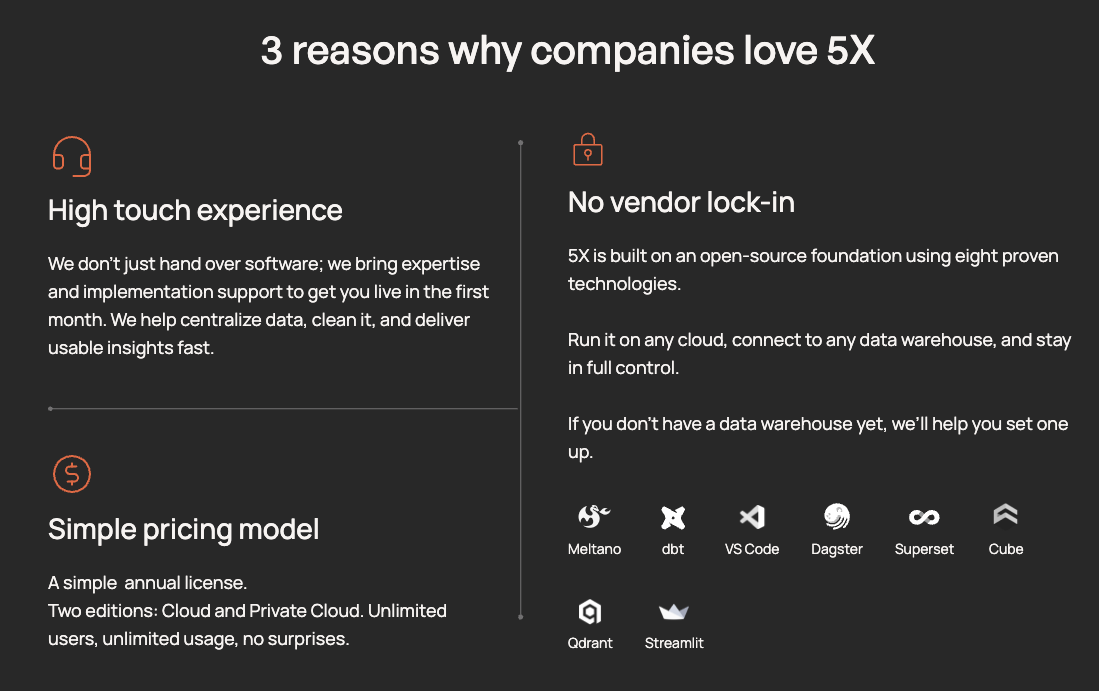

A prime example of this new breed is 5X, which is a unified data automation platform built on best-in-class open-source technologies. It replaces the usual 6–10 separate tools—Fivetran for ingestion, Snowflake for warehousing, Airflow for pipelines, Looker for BI, etc. There’s no fear of vendor lock-in; you own your data and can extend the platform as you see fit.

Consider Bank Novo, a fintech company. They consolidated their data platform and optimized their pipelines with 5X and managed to save around $350,000 annually on data platform costs.

From a management perspective, consolidation also gives you better visibility and control. It’s much easier to enforce security policies or monitor costs when everything is managed in one place.

The top data automation tools teams evaluate in 2025

Once teams accept that manual data work does not scale, the next question is predictable.

“Which tools are actually worth evaluating?”

The data automation landscape in 2025 falls into two buckets:

- point solutions that automate one part of the lifecycle extremely well

- platforms that aim to automate most or all of it

Both have a place, depending on how fragmented or consolidated you want your stack to be.

Here are six data automation tools that consistently show up in CTO evaluations, each with a clear positioning, strengths, and tradeoffs.

1. 5X: Best for end-to-end data automation on an open-source foundation

5X positions itself as a unified data automation platform rather than a single-purpose tool. Its core value proposition is simple: automate ingestion, transformation, orchestration, quality, governance, and activation inside one system instead of stitching together multiple tools.

Key features

- Automated ingestion from hundreds of sources

- Built-in transformation and modeling

- Native orchestration with monitoring and retries

- Automated data quality checks and pipeline health monitoring

- Semantic layer and BI activation

- Modular architecture built on open-source technologies

Pros

- Replaces multiple tools with one platform

- Strong governance and lineage by default

- Lower operational overhead over time

- Designed for analytics and AI readiness

Cons

- Less suitable if you only need a single narrow capability

G2 rating: 4.8 / 5

Also read: How to evaluate your data AI readiness in 5 easy steps

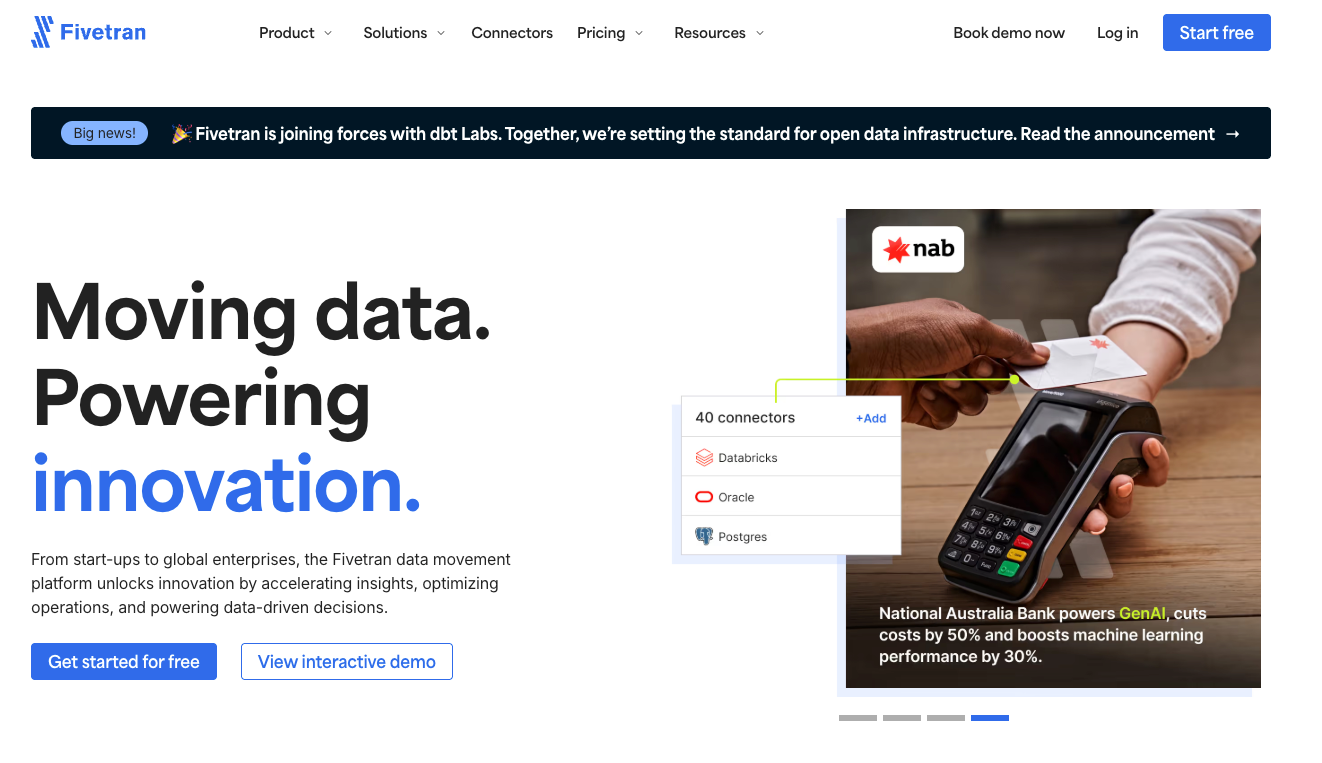

2. Fivetran: Best for automated data ingestion with minimal setup

Fivetran is one of the most widely adopted automated data processing tools for ingestion. Its primary strength is reliability and ease of use. Teams connect a source, point it to a warehouse, and let Fivetran handle schema changes and sync schedules.

Key features

- Fully managed ELT pipelines

- 300+ prebuilt connectors

- Automatic schema evolution handling

- Near real-time syncing for many sources

Pros

- Very low operational overhead

- Reliable and predictable ingestion

- Minimal engineering effort required

Cons

- Limited to ingestion only

- Costs can grow quickly with data volume

- Requires additional tools for transformation, orchestration, and quality

G2 rating: 4.2 / 5

3. Airbyte: Best for open-source ingestion flexibility

Airbyte is often evaluated alongside Fivetran but with a different philosophy. It is open source at its core and gives teams more control over connectors and deployment. This makes it attractive to engineering-led teams that want flexibility and are willing to manage more infrastructure.

Key features

- Open-source connector framework

- Large and growing connector catalog

- Self-hosted and cloud options

- Custom connector development support

Pros

- No vendor lock-in

- Highly customizable

- Strong community-driven innovation

Cons

- Requires more operational effort

- Reliability varies by connector

- Still ingestion-focused rather than end-to-end

G2 rating: 4.6 / 5

4. dbt: Best for analytics engineering and data transformation

dbt is not an ingestion tool and does not try to be one. Its role in the data automation ecosystem is to bring software engineering discipline to transformations inside the data warehouse.

It is foundational for teams that care deeply about analytics quality and version-controlled data models.

Key features

- SQL-based transformation modeling

- Dependency-aware execution

- Built-in testing and documentation

- Strong integration with modern warehouses

Pros

- Excellent for standardizing business logic

- Improves trust and consistency in analytics

- Strong ecosystem and community

Cons

- Requires separate ingestion and orchestration tools

- Not designed for end-to-end automation on its own

G2 rating: 4.5 / 5

5. Apache Airflow: Best for complex workflow orchestration

Airflow is the backbone of many mature data stacks. It excels at orchestrating complex workflows with dependencies, retries, and scheduling logic. It is powerful, flexible, and engineering-heavy.

Key features

- Python-based workflow definitions

- Rich scheduling and dependency management

- Extensive operator ecosystem

- Strong monitoring and alerting

Pros

- Highly flexible

- Ideal for complex, custom workflows

- Widely adopted and battle-tested

Cons

- Steep learning curve

- Requires infrastructure management

- Not designed for ingestion or analytics directly

G2 rating: 4.3 / 5

6. Hevo Data: Best for teams that want ingestion plus light transformation

Hevo positions itself between Fivetran and full platforms. It offers automated ingestion with some built-in transformation capabilities, targeting teams that want more than pure ELT without managing multiple tools.

Key features

- No-code ingestion pipelines

- Built-in transformations

- Near real-time syncing

- Managed infrastructure

Pros

- Faster time to value than DIY stacks

- Easier than engineering-heavy tools

- Good mid-market fit

Cons

- Limited orchestration and governance

- Less flexible than open-source options

- Still requires external BI and modeling tools

While the platform is highly efficient, the pricing can feel slightly steep for smaller teams scaling up their data operations. Advanced transformation options are powerful but could benefit from more flexibility for highly customized logic. In some cases, documentation could go deeper for complex use cases.

~ Ravi Shankar S., Full stack developer

G2 rating: 4.4 / 5

Choosing the right data automation platform: what to look for

If you’re considering embracing data automation and perhaps consolidating your stack, it’s critical to evaluate platforms wisely. Not all tools labeled “automated” or “unified” will meet your needs. Here are key factors and criteria to consider when comparing solutions:

1. End-to-end coverage

Does the platform cover the majority of your data workflow needs (ingestion, storage, transformation, analytics), or will you still need to stitch together many point solutions? A good platform should minimize the DIY glue you have to write.

2. Openness and integrations

Check if it’s built on open standards or open-source tech. This ensures you’re not locked in and can customize if needed. Also verify it has native integrations with any must-have tools your team uses. E.g. will it connect easily to Salesforce for ingest, or to Tableau for viz? Avoid systems that live in a silo.

3. Modularity

A quality platform will be modular, allowing you to use only the pieces you need and plug others into your existing ecosystem. You might not adopt it all on day one; maybe you start with automated ingestion and add other modules later. Modular design makes that feasible.

4. Scalability and performance

Make sure the platform can scale with your data volume and complexity. Automation is no good if it chokes on large datasets or high concurrency. Look for benchmarks or case studies on data size and user load, and features like elastic scaling or auto-tuning of queries.

5. Data quality and monitoring

Since one goal is to trust your data, the platform should have built-in data quality checks, error handling, and monitoring dashboards. Automated alerts for anomalies, lineage tracking, and even AI-assisted data observability are big pluses. You want a system that not only moves data, but also ensures it’s correct.

Also read: Data Trust Score: Measuring and Improving Data Reliability Across the Enterprise

4. Security and compliance

Evaluate how the platform handles security (encryption, access control) and compliance needs. Does it support role-based access and masking of sensitive data? Is there audit logging? Enterprise-grade platforms should make it easier, not harder, to meet compliance.

5. Total cost of ownership

Consider not just license cost, but the time and resources saved. Platforms that replace multiple tools can dramatically cut your overall spend. For instance, if one product can do the work of ETL, pipeline orchestration, and monitoring, that consolidates three budgets into one.

Also factor in the reduction in engineering hours spent on maintenance. As one study noted, companies with strong data automation can redirect thousands of hours from maintenance to innovation, which is an indirect cost saving.

6. Support and ecosystem

Look at the support model: do they offer strong customer support or communities? Since you’re trusting a platform, you want to know you’ll have backup if issues arise. Also, an ecosystem of partners or experts can be valuable for quick implementation or custom use cases.

Source: G2

In your evaluation, think less about checking off a list of trendy tools, and more about how a platform will fit into your environment and solve your integration headaches. How it fits together with your goals is what drives value.

Many teams find 5X attractive because it scores well on these criteria:

- open-core platform (built atop technologies like Snowflake, Airflow, and Kubernetes)

- modular in design (you can use 5X for just ingestion or go all-in for the full stack)

- focused on end-to-end automation (their platform handles everything from source connectors to BI, with monitoring at each step)

Stop fighting your data and start harnessing it

It bears repeating: your data is one of your biggest assets; it shouldn’t feel like a liability. Modern data automation tools are the key to turning the tide. They allow technical teams to reclaim their time and focus on strategic projects, and they empower business teams with timely, trusted insights. Instead of adversaries wrestling with unruly data, humans and data can finally be on the same team.

If you’re a CTO or data executive who has felt the pain of a fragmented stack or seen projects stall because the data wasn’t there when needed, now is the time to explore how a unified automation platform can change the game.

Consider this an invitation to stop fighting your data fires and start lighting data-fueled sparks of innovation. The technology has matured to a point where even complex global enterprises are running largely automated, lights-out data operations – and reaping the rewards.

5X, for instance, has helped companies across retail, SaaS, and finance slash data platform costs by 30% while increasing reliability and speed.

Are data automation tools only useful for large enterprises?

Can data automation tools replace data engineers?

How long does it take to see value from data automation?

Do data automation platforms limit flexibility compared to point tools?

Building a data platform doesn’t have to be hectic. Spending over four months and 20% dev time just to set up your data platform is ridiculous. Make 5X your data partner with faster setups, lower upfront costs, and 0% dev time. Let your data engineering team focus on actioning insights, not building infrastructure ;)

Book a free consultationHere are some next steps you can take:

- Want to see it in action? Request a free demo.

- Want more guidance on using Preset via 5X? Explore our Help Docs.

- Ready to consolidate your data pipeline? Chat with us now.

Get notified when a new article is released

Want to automate your entire data lifecycle instead of just one part of it? See how teams do it with 5X.

Want to automate your entire data lifecycle instead of just one part of it? See how teams do it with 5X.

How retail leaders unlock hidden profits and 10% margins

Retailers are sitting on untapped profit opportunities—through pricing, inventory, and procurement. Find out how to uncover these hidden gains in our free webinar.

Save your spot

%201.svg)

.png)