How to scale AI in your organization?


TL;DR

- Scaling AI from pilot projects to enterprise-wide deployment is now a business imperative. Stuck in “pilot purgatory,” many companies fail to realize AI’s full value; only 4% of firms have achieved significant ROI from AI initiatives

- Foundation first: Unified, high-quality, governed data with strict security/PII controls, or nothing scales

- Industrialize it: MLOps (versioning, CI/CD, monitoring, retraining) + accountable governance (ownership, bias/privacy, auditability)

- Prioritize & adopt: Start with high-impact, data-ready use cases; reuse features/datasets and deliver insights inside CRM/ERP

- Outcomes & next steps: Higher revenue, lower cost, faster decisions

Scaling AI is Tetris at Level 10: if the base isn’t flat, you top out fast.

Most teams keep “dropping pieces” (POCs) onto a wobbly stack—siloed data, ad-hoc pipelines, no monitoring—then wonder why nothing makes it to production. The fix isn’t another flashy model; it’s a foundation that lets every new block snap in without breaking the board.

That base looks like this: a unified, governed data layer with shared semantics, data contracts, lineage, and least-privilege access; repeatable pipelines that don’t need a hero engineer; and MLOps with versioning, CI/CD, drift detection, and scheduled retraining.
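To make “data contracts” less abstract, here is a minimal Python sketch of one. It assumes a contract is simply a mapping of column names to expected types plus a nullability flag. The `ORDERS_CONTRACT` table and its columns are hypothetical, and in practice teams often express contracts with a dedicated schema or data-quality tool rather than hand-written checks.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Column:
    """One column in a (hypothetical) data contract."""
    dtype: type
    nullable: bool = False


# Hypothetical contract for an "orders" table, agreed between producer and consumers.
ORDERS_CONTRACT: dict[str, Column] = {
    "order_id": Column(str),
    "customer_id": Column(str),
    "amount": Column(float),
    "coupon_code": Column(str, nullable=True),
}


def validate(record: dict[str, Any], contract: dict[str, Column]) -> list[str]:
    """Return the contract violations for one record (an empty list means valid)."""
    errors = []
    for name, col in contract.items():
        if name not in record:
            errors.append(f"missing column: {name}")
            continue
        value = record[name]
        if value is None:
            if not col.nullable:
                errors.append(f"null not allowed: {name}")
        elif not isinstance(value, col.dtype):
            errors.append(f"wrong type for {name}: expected {col.dtype.__name__}")
    # Columns the producer added without updating the contract.
    for name in sorted(record.keys() - contract.keys()):
        errors.append(f"unexpected column: {name}")
    return errors
```

Running a check like this where data enters shared tables means a breaking upstream change fails loudly instead of silently degrading every model downstream.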

Then you deliver outputs in CRM/ERP/support tools with clear ownership, SLAs, and auditability so risk and compliance stay intact as you scale.

This post shows you how to go from stack-teetering pilots to a system that compounds: why scaling now matters, what a secure data foundation actually includes, how to prioritize the first two high-impact use cases, and the governance and operating cadence that keep models reliable after the demo.

Why do you need to scale AI in your organization?

AI initiatives usually start as small pilots: a handful of data scientists build a model that shows promising results in a sandbox. But failing to scale beyond the pilot stage is costly.

Research suggests that the vast majority of AI projects never make it to production: for every 33 AI proofs of concept, only about 4 reach broader deployment. Put differently, roughly 88% of pilots never make the cut to wide-scale adoption.
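A quick check shows that the two figures describe the same ratio:

$$
\frac{4}{33} \approx 0.12
\quad\Longrightarrow\quad
1 - \frac{4}{33} = \frac{29}{33} \approx 0.88 = 88\%
$$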

This “pilot paralysis” means wasted investment and lost opportunity. You need to scale AI to turn those experiments into real ROI. Until AI is deployed at scale, touching real business processes and customers, it’s unlikely to deliver significant value.

Competitiveness is another big reason to scale AI. Your competitors are not standing still. Gartner predicted (back in 2022) that by the end of 2024, over 75% of organizations would shift from piloting AI to operationalizing it in the business. We are now at that inflection point: if you aren’t scaling AI, you risk falling behind peers who are.

Finally, consider the risk of not scaling: If AI remains just an experiment, you miss out on data insights and process improvements that your more AI-mature competitors will capitalize on.

Most companies aren’t where they think they are when it comes to data & AI. We’ve had a few companies take the Data & AI Maturity Assessment and the results show a clear pattern: the majority are only in the Developing stage... AI adoption is still mostly in experimentation mode. This is a reality check!

~ Tarush Aggarwal, CEO of 5X

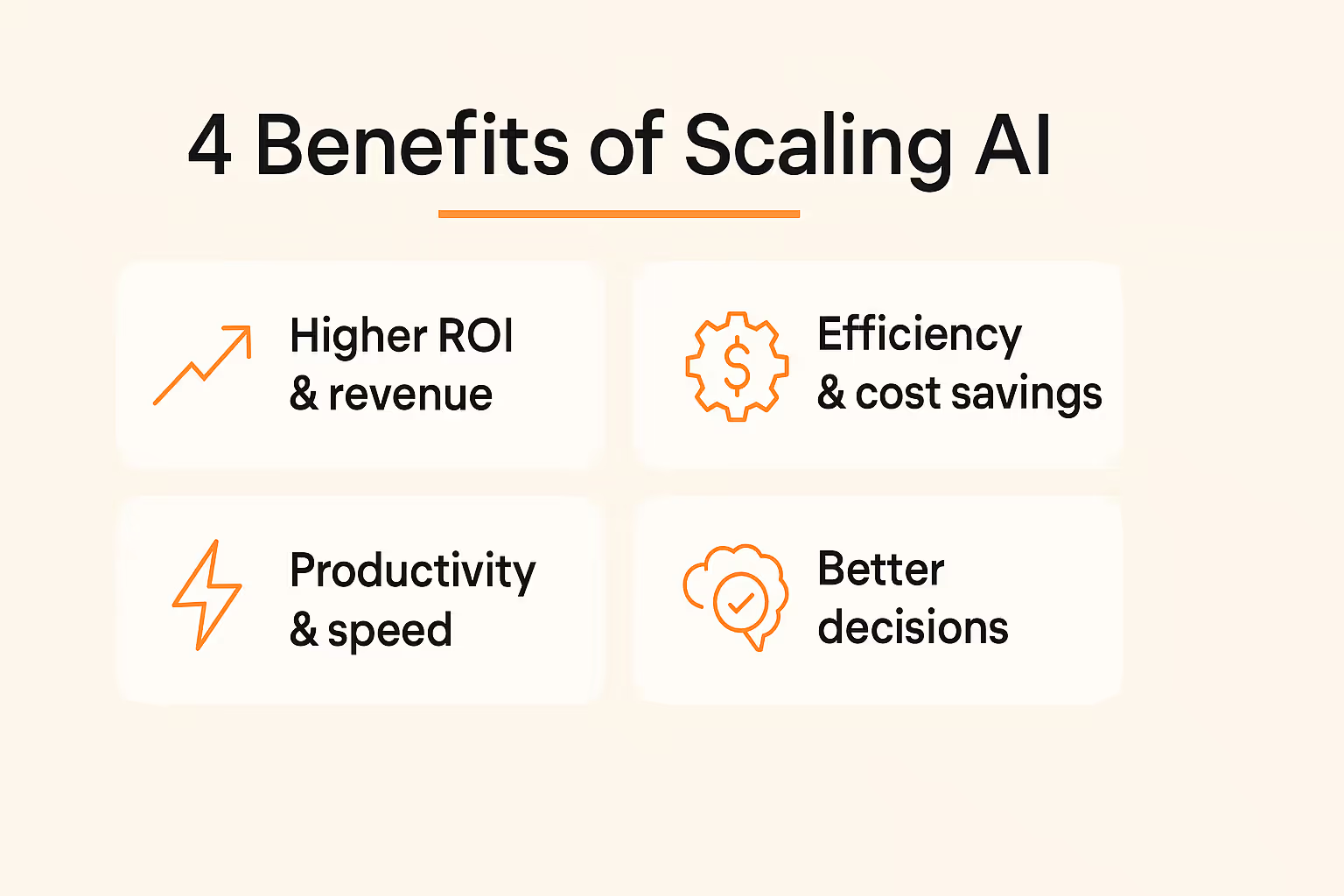

4 Benefits of scaling AI for your business

When you scale AI beyond pilots, you position your business to reap tangible, enterprise-level benefits. Here are some key advantages companies see when they successfully scale AI across the organization:

1. Higher ROI and revenue growth

Scaled AI initiatives can directly drive top-line growth. By embedding AI into products and services, companies can create more personalized offerings, enter new markets, and improve customer acquisition/retention.

Early adopters of AI at scale have reported revenue increases of roughly 10–20%, thanks to better analytics and smarter decision-making.

AI can help identify sales opportunities, optimize pricing, and even create new revenue streams (for example, by productizing AI-driven insights). In an increasingly data-driven economy, those who operationalize AI can outperform those who rely on intuition or legacy processes.

2. Efficiency and cost savings

One of the most immediate benefits of scaling AI is operational efficiency. AI and machine learning can automate routine tasks, streamline workflows, and reduce errors, leading to significant cost savings.

Examples abound: AI systems can optimize supply chains (reducing waste and inventory costs), automate customer support (cutting service costs), and improve equipment maintenance via predictive analytics (preventing costly downtime).

3. Productivity and speed

AI at scale empowers teams to work smarter and faster. By automating data processing and augmenting human workers with AI insights, companies can dramatically increase productivity.

Consider tasks like generating reports, analyzing trends, or handling transactions. AI can do the heavy lifting in seconds, allowing your staff to focus on higher-value work. Scaled AI also accelerates time-to-insight: organizations can go from data to decision in real time. This speed is a competitive advantage in itself, letting you respond to market changes or customer needs more rapidly than others.

4. Better decision-making (data-driven culture)

When AI is widespread, data-driven decision-making becomes the norm. Instead of decisions based on gut feel, teams have AI analytics and predictions guiding them.

Scaled AI gives leaders a comprehensive view of the business through real-time dashboards, predictive models for forecasting, and even prescriptive suggestions for optimal actions. The result is better decisions at every level, both strategic and operational.

One thing that I'm proud of is our attribution model. You need to understand what's working and what's not, so you can fine-tune the top of the funnel engine. And attribution is how we do that. Using attribution models, we make capital allocation decisions and ingest all touch points across all channels and campaigns with our customers.

~ Kiriti Manne, Head of Strategy & Data, Samsara

How Samsara’s Attribution Model Turns Data into Gold

For example, AI at scale can improve forecasting accuracy in finance, identify fraud patterns in real-time for risk management, or suggest product improvements from customer feedback analysis. Over time, this builds a culture where metrics and AI insights inform every key decision, reducing risk and bias.

Also read: Driving Data-Driven Culture: Advice From Data Leaders at Freshworks, Samsara, and others

If you can just ask the [Gen AI] model, 'Hey, why did this account churn? It had great usage,' or 'Hey, why was this account retained? It had horrible usage,' you can quickly get to that level of generating new hypotheses, getting into the data, and making it work. And that’s when you will start to see AI and your company come together.

~ Michael Tambe, Sr. Director, Data & Analytics, Gong

Improving Gong’s customer retention with predictive data analytics

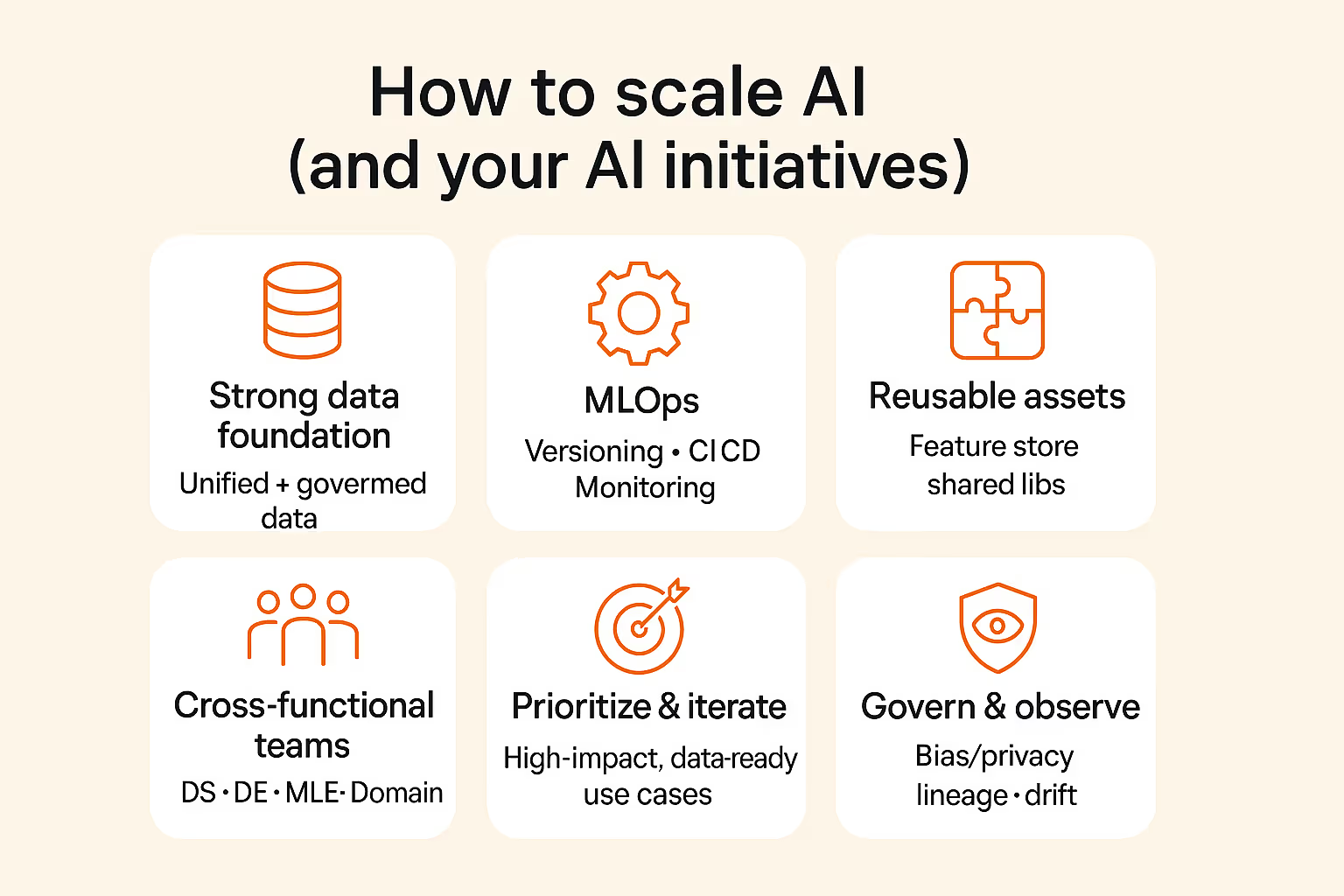

How do you scale an AI model (and your AI initiatives)?

Successfully scaling an AI model from pilot to production and scaling up the number of AI models in use requires a deliberate strategy and the right foundation.

It’s not as simple as deploying your one-off model on a bigger server. You must industrialize your AI development and deployment process so it can reliably support many models, large datasets, and multiple teams.

1. Build a strong data foundation (modern data platform)

Data readiness is step zero.

Better AI isn't about more data; it is about the quality of data and its connectivity. We have assigned accountability to make sure that we just don't keep on saying the quality is bad, but keep improving it.

~ Anindita Misra, Global Director of Knowledge Activation & Trust, Decathlon Digital

How Decathlon uses data to optimize in-store operations

Ensure you have a centralized, high-quality data platform that can handle large, diverse data at speed. That means investing in cleaning and integrating your data sources.

Adopt a modern data platform (like a cloud data warehouse or lakehouse) that unifies siloed data and provides governed, secure access.

Without a robust data infrastructure, your AI models will starve or stumble. With it, you’ll feed your AI efforts with reliable data pipelines, enabling consistent scaling.
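As a sketch of what “reliable data pipelines” can mean in practice, the Python snippet below profiles a batch before it reaches a model. It measures the share of missing values per column, counts duplicate primary keys, and blocks the batch if either exceeds a threshold. The thresholds (5% nulls, zero duplicates) and the `customer_id` key are illustrative assumptions, not recommendations.

```python
from collections import Counter
from typing import Any


def profile_batch(rows: list[dict[str, Any]], key: str) -> dict[str, Any]:
    """Compute simple quality metrics: per-column null rate and duplicate-key count."""
    if not rows:
        raise ValueError("empty batch")
    columns = sorted({c for row in rows for c in row})
    # A column missing from a row counts as null for that row.
    null_rate = {c: sum(row.get(c) is None for row in rows) / len(rows) for c in columns}
    key_counts = Counter(row.get(key) for row in rows)
    duplicates = sum(n - 1 for n in key_counts.values() if n > 1)
    return {"rows": len(rows), "null_rate": null_rate, "duplicate_keys": duplicates}


def passes_quality_gate(profile: dict[str, Any], max_null_rate: float = 0.05,
                        max_duplicates: int = 0) -> bool:
    """Block the batch if any column is too sparse or the primary key is not unique."""
    worst_null = max(profile["null_rate"].values(), default=0.0)
    return worst_null <= max_null_rate and profile["duplicate_keys"] <= max_duplicates
```

Wiring a gate like this into every pipeline, rather than into one team’s notebook, is what keeps quality consistent as the number of models grows.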

Also read: Unlocking Data Potential: Top 6 Tools for Data Readiness

2. Establish MLOps and reusable tools

Treat AI scaling as an engineering discipline. Implement MLOps (Machine Learning Operations) practices to streamline how models are developed, tested, deployed, and monitored. This includes setting up automated CI/CD pipelines for model code, version controlling datasets and models, and continuous monitoring of model performance in production.
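One concrete piece of an MLOps CI/CD pipeline is an automated promotion gate: a candidate model replaces the production model only if it clears a minimum quality bar and does not regress against the current version. The sketch below assumes a higher-is-better holdout metric such as AUC and uses hypothetical names (`ModelCandidate`, `should_promote`); the thresholds are placeholders you would set per use case.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelCandidate:
    name: str
    version: str
    data_version: str  # tag or hash of the training-data snapshot, for reproducibility
    metric: float      # holdout score; assumed higher-is-better (e.g. AUC)


def should_promote(candidate: ModelCandidate, production: Optional[ModelCandidate],
                   min_metric: float = 0.70, min_gain: float = 0.005) -> tuple[bool, str]:
    """CI gate: promote only if the candidate clears a floor and beats production."""
    if candidate.metric < min_metric:
        return False, f"below floor ({candidate.metric:.3f} < {min_metric:.3f})"
    if production is None:
        return True, "no model in production yet"
    gain = candidate.metric - production.metric
    if gain < min_gain:
        return False, f"gain {gain:+.3f} is below required {min_gain:+.3f}"
    return True, f"beats {production.version} by {gain:+.3f}"
```

Recording `data_version` next to the model version is the simplest way to tie each model back to the exact dataset it was trained on.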

Reusable assets are crucial too; for example, create a feature store (a centralized repository of machine learning features) to avoid redundant work and ensure consistency across models. Similarly, develop common libraries or modules (code assets) that teams can reuse so you’re not reinventing the wheel for every project.
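To illustrate why a feature store helps, here is a toy in-memory version in Python. Feature values are written once and looked up by entity and timestamp, and the lookup is point-in-time: it returns the latest value known at or before the requested time, which keeps future data from leaking into training sets. The class and method names are hypothetical; real feature stores add persistence, online serving, and access control.

```python
import bisect
from collections import defaultdict
from typing import Any, Optional


class ToyFeatureStore:
    """Stores timestamped feature values per (feature, entity) for reuse across models."""

    def __init__(self) -> None:
        # (feature_name, entity_id) -> parallel lists of timestamps and values, sorted by time
        self._times: dict[tuple[str, str], list[int]] = defaultdict(list)
        self._values: dict[tuple[str, str], list[Any]] = defaultdict(list)

    def write(self, feature: str, entity: str, ts: int, value: Any) -> None:
        key = (feature, entity)
        i = bisect.bisect_right(self._times[key], ts)  # insertion point keeps lists sorted
        self._times[key].insert(i, ts)
        self._values[key].insert(i, value)

    def get_as_of(self, feature: str, entity: str, ts: int) -> Optional[Any]:
        """Latest value with timestamp <= ts (point-in-time correct), else None."""
        key = (feature, entity)
        i = bisect.bisect_right(self._times.get(key, []), ts)
        return self._values[key][i - 1] if i > 0 else None
```

Because every model reads the same `orders_30d`-style definitions from one place, two teams cannot quietly compute the “same” feature in two different ways.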

3. Invest in cross-functional teams and skills

Create mixed teams that include data scientists, data engineers, software engineers, domain experts, and business stakeholders. These teams ensure that models address real business needs and can be integrated into workflows.

Engage stakeholders from various departments early (finance, ops, marketing, etc.) so that AI solutions are designed with end-user requirements in mind. You’ll likely need to upskill or hire to fill certain roles (for example, MLOps engineers or data platform specialists).

Organizationally, many companies form an AI Center of Excellence (CoE) or a similar group, which sets best practices and helps different units adopt AI. Its work includes training employees to work with AI (data literacy), establishing trust in AI systems, and ensuring leadership buy-in.

4. Start with impactful use cases and iterate

Not every AI pilot should be scaled, and not all at once. Prioritize AI projects with clear business value and high success potential: for example, automating a well-defined process or enhancing a customer-facing function that has clear KPIs.
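One lightweight way to make this prioritization explicit is a weighted scorecard. The sketch below scores hypothetical use cases on business impact, data readiness, and feasibility (1 to 5 each) and picks the top two; the criteria and weights are assumptions to adapt, not a standard method.

```python
from dataclasses import dataclass

# Illustrative weights: impact counts most, then data readiness.
WEIGHTS = {"impact": 0.5, "data_readiness": 0.3, "feasibility": 0.2}


@dataclass(frozen=True)
class UseCase:
    name: str
    impact: int          # 1-5: expected effect on a business KPI
    data_readiness: int  # 1-5: is the needed data available, clean, and governed?
    feasibility: int     # 1-5: effort and integration complexity (5 = easiest)

    def score(self) -> float:
        return sum(w * getattr(self, attr) for attr, w in WEIGHTS.items())


def rank(use_cases: list[UseCase], top_n: int = 2) -> list[str]:
    """Return the names of the top_n use cases by weighted score."""
    ordered = sorted(use_cases, key=lambda u: u.score(), reverse=True)
    return [u.name for u in ordered[:top_n]]
```

Giving data readiness its own weight means a high-impact idea with poor data (like the hypothetical “dynamic pricing” case in the test) naturally waits until the foundation catches up.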

Success, first and foremost, doesn’t mean perfect data. Nobody has perfect data. But it means that you are confident in your action… if the model gives you insights that you trust… then I think you’re ready to go into production.

~ Gaia Camillieri, Managing Director, MISSION+

How to get your data ready for AI

Early wins build momentum and executive confidence. At the same time, tie each AI initiative to specific, measurable outcomes by defining up front what success looks like (e.g., reduce processing time by 50% or improve forecast accuracy by 20%).
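Writing the target down as data makes “success” checkable rather than debatable. A minimal sketch, assuming you have a baseline and a measured value for each KPI and that targets are relative changes (so “improve accuracy by 20%” means 20% above baseline, not 20 percentage points); the KPI names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    kpi: str
    baseline: float
    target_change: float   # required relative change, e.g. -0.50 means "cut by 50%"
    lower_is_better: bool

    def met(self, observed: float) -> bool:
        if self.baseline == 0:
            raise ValueError("relative change is undefined for a zero baseline")
        change = (observed - self.baseline) / self.baseline
        # "Lower is better" KPIs must fall at least as far as the (negative) target.
        return change <= self.target_change if self.lower_is_better else change >= self.target_change
```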

Iterate gradually: scale one use case, learn from it, then expand to the next. This iterative scaling ensures you incorporate lessons (maybe you discovered a need for better data labeling or a new governance checkpoint) before tackling bigger, more complex AI deployments.

5. Manage the full AI lifecycle (governance and monitoring)

When scaling AI, you need robust governance to keep models performing well and prevent chaos. Establish processes to continuously monitor model performance, data drift, and business metrics.

Also read: Best Data Governance Tools

Over time, models can decay (accuracy drops as data changes). A scaled AI program will have procedures to retrain or retire models proactively. AI governance also means ensuring compliance and ethical standards are met across all AI applications.
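Drift detection is one common trigger for retraining. The sketch below computes the Population Stability Index (PSI), a widely used drift statistic, between a feature’s training distribution and its recent production distribution, using equal-width bins derived from the training data. The 0.2 alert threshold is a frequently quoted rule of thumb rather than a universal standard, and the bin count and `eps` floor are assumptions.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` vs `expected`, binned on the range of `expected`."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range production values into the edge bins.
            i = min(max(int((v - lo) / width), 0), bins - 1)
            counts[i] += 1
        # Floor at eps so empty bins don't cause log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(train_values: list[float], live_values: list[float],
                     threshold: float = 0.2) -> bool:
    """Flag a feature for retraining review when its PSI exceeds the threshold."""
    return psi(train_values, live_values) > threshold
```

A scheduled job that runs this per feature and opens a retraining ticket (or kicks off a retraining pipeline) is one way to make “retrain proactively” an operating routine instead of a post-incident fix.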

Bake in accountability: who approves models before they go live? How do you document model assumptions and data lineage? Leading firms integrate governance tools and reporting from the outset so that, as they deploy dozens of models, they maintain transparency and control.

Additionally, consider setting up a centralized model registry and audit trails for all AI models in production. This way, when auditors or executives come knocking, you can demonstrate what each model is doing, what data it uses, and how decisions are made.
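A central registry with an audit trail can start small. The Python sketch below records which dataset each model version was trained on, who owns it and who approved it, refuses to deploy anything unapproved, and appends every action to a log you could hand to auditors. All names are hypothetical, the “owners cannot self-approve” rule is an illustrative policy, and a real registry would persist this outside process memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ModelVersion:
    name: str
    version: int
    training_data: str              # identifier of the dataset snapshot (lineage)
    owner: str
    approved_by: Optional[str] = None


@dataclass
class ModelRegistry:
    versions: dict[tuple[str, int], ModelVersion] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)  # append-only event history

    def _record(self, action: str, actor: str, mv: ModelVersion) -> None:
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "action": action, "actor": actor,
            "model": mv.name, "version": mv.version, "training_data": mv.training_data,
        })

    def register(self, mv: ModelVersion) -> None:
        self.versions[(mv.name, mv.version)] = mv
        self._record("register", mv.owner, mv)

    def approve(self, name: str, version: int, approver: str) -> None:
        mv = self.versions[(name, version)]
        if approver == mv.owner:
            raise PermissionError("owners cannot approve their own models")
        mv.approved_by = approver
        self._record("approve", approver, mv)

    def deploy(self, name: str, version: int, actor: str) -> None:
        mv = self.versions[(name, version)]
        if mv.approved_by is None:
            raise PermissionError(f"{name} v{version} has not been approved")
        self._record("deploy", actor, mv)
```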

6. Foster a culture of experimentation (with discipline)

At scale, AI success depends on balancing innovation with process. Encourage teams to keep experimenting with new AI use cases (e.g., hackathons or pilot projects on new datasets), because scaling isn’t one-and-done: it’s an ongoing journey of expanding AI’s frontier in your business.

However, do so in a governed way: use sandbox environments where ideas can be tested safely, and have a pipeline for promoting the promising ones to production.

Document and share learnings from each experiment across the organization so that knowledge compounds. A culture that celebrates data and AI, backed by a clear strategy and strong data platform, will naturally drive scaling further.

What are the challenges in scaling AI?

Scaling AI is rewarding, but it’s far from easy. In fact, the road from a successful AI pilot to a fully deployed enterprise solution is often where the real challenges begin. Understanding these hurdles can help you proactively address them.

1. Data quality and silos

“Garbage in, garbage out” is magnified at scale.

Many AI pilots use small, cleaned datasets, but enterprise AI must handle messy, real-world data from across the organization. Poor data quality is consistently cited as a top barrier to AI deployment. Incomplete, inconsistent, or siloed data can cause models to perform poorly or even fail when scaled. Additionally, data is often spread across departments in incompatible systems.

Mitigation

Invest in data engineering and data governance. Before scaling a model, ensure you have pipelines to continuously feed it high-quality, up-to-date data. Implement a master data management strategy to break silos. For example, centralize data in a cloud data warehouse or lake accessible to all AI applications.

2. Talent and expertise gaps

A small pilot might succeed with a few star data scientists, but scaling AI demands a broader base of skills, including data engineering, MLOps, cloud architecture, and the domain knowledge needed to integrate AI into business processes. Many organizations lack sufficient in-house AI expertise to support multiple projects.

Mitigation

Upskill your workforce and/or hire strategically. Consider training programs to turn analysts into “citizen data scientists” and to familiarize engineers with ML tools. Bringing in external partners or consultants can jump-start scaling efforts (e.g., having experts set up your MLOps framework or data platform). Another approach is adopting AI platforms that abstract away some of the complexity, for instance using AutoML or no-code AI tools for simpler use cases so domain experts can build models under guidance.

3. Unclear objectives and alignment

One big reason pilots don’t scale is a lack of clear business objectives. If an AI project was pursued because it was “cool tech” rather than tied to a business problem, it often stalls out. Leaders may then hesitate to fund scaling something with no obvious ROI.

Mitigation

Start with a strategy. Ensure every AI initiative has a well-defined success metric linked to business value (e.g., increase conversion by X%, reduce churn by Y%). Communicate this clearly to all stakeholders. It’s easier to get buy-in to scale an AI solution when, say, the sales VP sees it directly contributes to hitting sales targets.

4. Integration and operationalization issues

An AI model in a Jupyter notebook is one thing; integrating it into production systems and workflows is another beast. Companies hit challenges connecting new AI systems with legacy IT infrastructure or software.

For example, your pilot churn model might work in a lab environment, but can it integrate with the live customer database and CRM system to actually trigger retention actions? Infrastructure scalability is also a concern: can your systems handle the compute load if the model needs to score millions of records or serve low-latency predictions?

Mitigation

Work closely with IT and software teams from the get-go. Plan the deployment architecture while building the model (don’t treat it as an afterthought). Use robust APIs or middleware to link AI into existing applications. Sometimes the solution is adopting new cloud infrastructure for AI inference at scale (e.g., using Kubernetes or serverless functions to serve models).
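To picture the churn example end to end, here is a sketch of the last step: turning model scores into retention tasks in a CRM through an API. `CrmClient` is a hypothetical interface standing in for whatever SDK or REST API your CRM vendor provides, and the 0.7 and 0.9 cut-offs are placeholders. Coding against an interface also lets you test the integration with an in-memory fake before touching the live system.

```python
from dataclasses import dataclass
from typing import Protocol


class CrmClient(Protocol):
    """Hypothetical interface; in practice this wraps your CRM vendor's API."""
    def create_task(self, account_id: str, subject: str, priority: str) -> None: ...


@dataclass(frozen=True)
class Score:
    account_id: str
    churn_probability: float


def push_retention_tasks(scores: list[Score], crm: CrmClient, threshold: float = 0.7) -> int:
    """Create a CRM follow-up task for each account at or above the churn threshold."""
    created = 0
    for s in sorted(scores, key=lambda s: s.churn_probability, reverse=True):
        if s.churn_probability < threshold:
            break  # sorted descending, so no remaining account qualifies
        priority = "high" if s.churn_probability >= 0.9 else "normal"
        crm.create_task(s.account_id, f"Retention outreach (risk {s.churn_probability:.0%})", priority)
        created += 1
    return created


class FakeCrm:
    """In-memory stand-in used for testing without a real CRM."""
    def __init__(self) -> None:
        self.tasks: list[tuple[str, str, str]] = []

    def create_task(self, account_id: str, subject: str, priority: str) -> None:
        self.tasks.append((account_id, subject, priority))
```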

5. Governance, risk, and compliance

The more widely AI is used, the more it comes under scrutiny from regulators, customers, and executives. A challenge is ensuring every team’s AI project adheres to company policies and ethical guidelines. Without oversight, one rogue AI application could cause legal trouble or brand damage (imagine an AI that unintentionally discriminates in lending decisions, for example).

Mitigation

Implement a robust AI governance framework. This might include an AI ethics board or review committee for high-impact use cases, bias audits for models (especially those affecting customers or employees), and strict data governance (to ensure privacy and compliance with regulations like GDPR). Incorporate security best practices: control access to AI models and sensitive data, and guard against adversarial attacks on your models.
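Bias audits often begin with a simple outcome-rate comparison. The sketch below computes the disparate impact ratio (each group’s approval rate divided by the highest group’s rate) and flags groups below 0.8, the “four-fifths” rule of thumb from US employment-selection guidance. It is a screening heuristic, not a full fairness assessment, and the group labels and decisions in the test are hypothetical.

```python
from collections import defaultdict


def disparate_impact(decisions: list[tuple[str, bool]], threshold: float = 0.8) -> dict[str, dict]:
    """Compare each group's positive-outcome rate with the best-treated group's rate.

    decisions: (group_label, approved) pairs, e.g. taken from a lending model's output.
    """
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    rates = {g: approved[g] / totals[g] for g in totals}
    best = max(rates.values())
    report = {}
    for g, r in rates.items():
        ratio = r / best if best > 0 else 1.0
        report[g] = {"rate": r, "ratio": ratio, "flagged": ratio < threshold}
    return report
```

A flagged group is a prompt for the review committee to investigate, not an automatic verdict; the same check can run on every retrained model before approval.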

The era of isolated AI experiments is over

To unlock AI’s true value, you need to weave it into the fabric of your enterprise. By now, it should be clear that successful AI scaling requires equal parts technology (data platforms, MLOps, tools) and strategy (clear goals, cross-functional teamwork, governance).

The path is challenging but achievable. Many organizations have gone from AI novices to AI leaders in just a few years by following these best practices. They started with strong data foundations, nurtured the right talent, focused on high-impact use cases, and used modern platforms to accelerate their journey.

As you plan your next steps, here are a few parting pieces of advice:

- Ensure executive sponsorship and organizational alignment: C-level buy-in is often the deciding factor that empowers scaling efforts with the necessary resources and urgency

- Measure and celebrate early wins: When you demonstrate quick wins (with hard numbers to back them up), it builds momentum and buy-in for further scaling

- Stay adaptable: The AI field evolves quickly. New tools, model types, and best practices are emerging constantly. Be ready to adopt innovations that can aid your scaling journey, whether that’s a breakthrough algorithm or a more efficient infrastructure solution

Finally, remember that you don’t have to go it alone. 5X exists to make scaling data and AI easier. It is a secure, end-to-end data platform built on an open-source foundation, with zero vendor lock-in, exactly the kind of flexible yet powerful backbone you want when scaling AI across clouds and systems.

FAQs

How do you measure AI success in your organization?

How can you scale AI for your business (practically)?

What “secure data foundation” do we actually need before scaling?

Which AI use cases should we scale first?

