Top 8 Data Orchestration Tools in 2026 [Expert-reviewed]

TL;DR

Business success hinges on understanding data to make smart decisions. According to Gartner, 87% of businesses have low BI and analytics maturity. Scattered, siloed data adds to this complexity, making it difficult to get a 360-degree view of operations. This is where data orchestration comes into play.

Data pipeline orchestration software helps businesses unify disparate data sources, extract valuable insights, and drive growth.

If you’re just starting your journey with orchestration, this guide is for you. We’ll get into what exactly data orchestration is, why it is crucial, and the most popular data orchestration tools in 2026.

What is data orchestration in data engineering?

Data orchestration is the process of managing the flow of data from various sources to a destination where it can be analyzed and used for decision-making.

Steps involved in data orchestration

- Transforming data: Cleaning and organizing data into a usable format

- Integrating data: Consolidating data collected from disparate sources to get a complete picture

- Automating workflows: Automating the steps of collecting, transforming, and integrating data so you don’t have to do it each time
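To make the three steps concrete, here is a minimal, dependency-free Python sketch. The sources, field names, and task functions are hypothetical stand-ins for real systems. What matters is the shape: each task declares its upstream dependencies, those dependencies form a DAG, and a small runner executes every task in dependency order using the standard library's `graphlib.TopologicalSorter` (Python 3.9+). Real orchestrators add scheduling, retries, and monitoring on top of this core idea.

```python
from graphlib import TopologicalSorter

# Hypothetical extract tasks standing in for two siloed systems.
def extract_crm():
    return [{"id": 1, "name": " Ada "}, {"id": 2, "name": "Lin"}]

def extract_billing():
    return [{"id": 1, "total": 120.0}, {"id": 2, "total": 75.5}]

def transform(customers):
    # Transform: clean stray whitespace out of customer names.
    return [{**c, "name": c["name"].strip()} for c in customers]

def integrate(customers, invoices):
    # Integrate: consolidate both sources on the shared "id" key.
    totals = {inv["id"]: inv["total"] for inv in invoices}
    return [{**c, "total": totals.get(c["id"], 0.0)} for c in customers]

# Each task maps to (function, ordered list of upstream tasks it consumes).
TASKS = {
    "extract_crm": (extract_crm, []),
    "extract_billing": (extract_billing, []),
    "transform": (transform, ["extract_crm"]),
    "integrate": (integrate, ["transform", "extract_billing"]),
}

def run_pipeline(tasks):
    """Automate: run every task once, always after its upstream tasks."""
    graph = {name: set(deps) for name, (_, deps) in tasks.items()}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        func, deps = tasks[name]
        # Pass upstream results in the order the task declared them.
        results[name] = func(*(results[d] for d in deps))
    return results

print(run_pipeline(TASKS)["integrate"])
# [{'id': 1, 'name': 'Ada', 'total': 120.0}, {'id': 2, 'name': 'Lin', 'total': 75.5}]
```

Because the runner only needs the dependency map, adding a new source or step means adding one entry to `TASKS` rather than rewriting the sequence by hand.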

5 Benefits of using data orchestration tools

#1 Make decisions faster

Automated workflows and real-time data processing deliver insights sooner, which speeds up decision-making. Choosing effective ways to visualize the data makes those insights easier to interpret. For instance, retailers can optimize inventory, marketing, and supply chain decisions by integrating real-time sales, inventory, and customer feedback data.

#2 Achieve better data quality

By automating cleaning, transformation, and organization, these tools minimize data errors and discrepancies and give teams a single, consistent source to work from.
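As a rough illustration of what an automated quality step can look like, the sketch below rejects records that are missing required fields or repeat an ID, and normalizes email addresses. The field names (`id`, `email`) and the rules themselves are assumptions for the example; production pipelines typically run checks like these as a dedicated task before data is loaded.

```python
# Hypothetical schema: every record must carry a non-empty id and email.
REQUIRED_FIELDS = ("id", "email")

def validate(rows):
    """Split rows into clean records and (row, reason) rejects."""
    clean, rejects, seen_ids = [], [], set()
    for row in rows:
        missing = [f for f in REQUIRED_FIELDS if row.get(f) in (None, "")]
        if missing:
            rejects.append((row, "missing " + ", ".join(missing)))
        elif row["id"] in seen_ids:
            rejects.append((row, "duplicate id"))
        else:
            seen_ids.add(row["id"])
            # Normalize emails so later joins and dedupes compare consistently.
            clean.append({**row, "email": row["email"].strip().lower()})
    return clean, rejects

rows = [
    {"id": 1, "email": " Ada@Example.com "},
    {"id": 1, "email": "dup@example.com"},
    {"id": 2, "email": ""},
]
clean, rejects = validate(rows)
print(clean)                            # [{'id': 1, 'email': 'ada@example.com'}]
print([reason for _, reason in rejects])  # ['duplicate id', 'missing email']
```

Keeping the rejects, rather than silently dropping them, gives the team something to inspect when a source system starts sending bad data.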

#3 Create a single source of truth

Data orchestration removes data silos and inconsistencies. This creates a reliable, centralized data repository for all departments and a foundation for better data management.

For example, financial institutions can consolidate loan, credit card, and customer data into a central repository for enhanced risk assessment and customer service.

#4 Seamlessly manage data scalability

Data orchestration tools are built to scale as your business grows. As data volume increases, they can absorb the influx without slowing systems down or degrading data quality, and with little manual intervention.

#5 Reduce operational costs

Data orchestration tools automate tasks such as data integration and transformation, which can significantly reduce labor costs. Improved data quality also means fewer errors and less rework, further lowering operational expenses.

Top 8 data orchestration tools

1. 5X Modeling and Orchestration

The 5X orchestrator lets you build complex data pipelines (DAGs) and deploy them to production as scheduled jobs. You can design, maintain, and optimize pipelines for your production environment, with jobs that run on a schedule or in response to triggers.

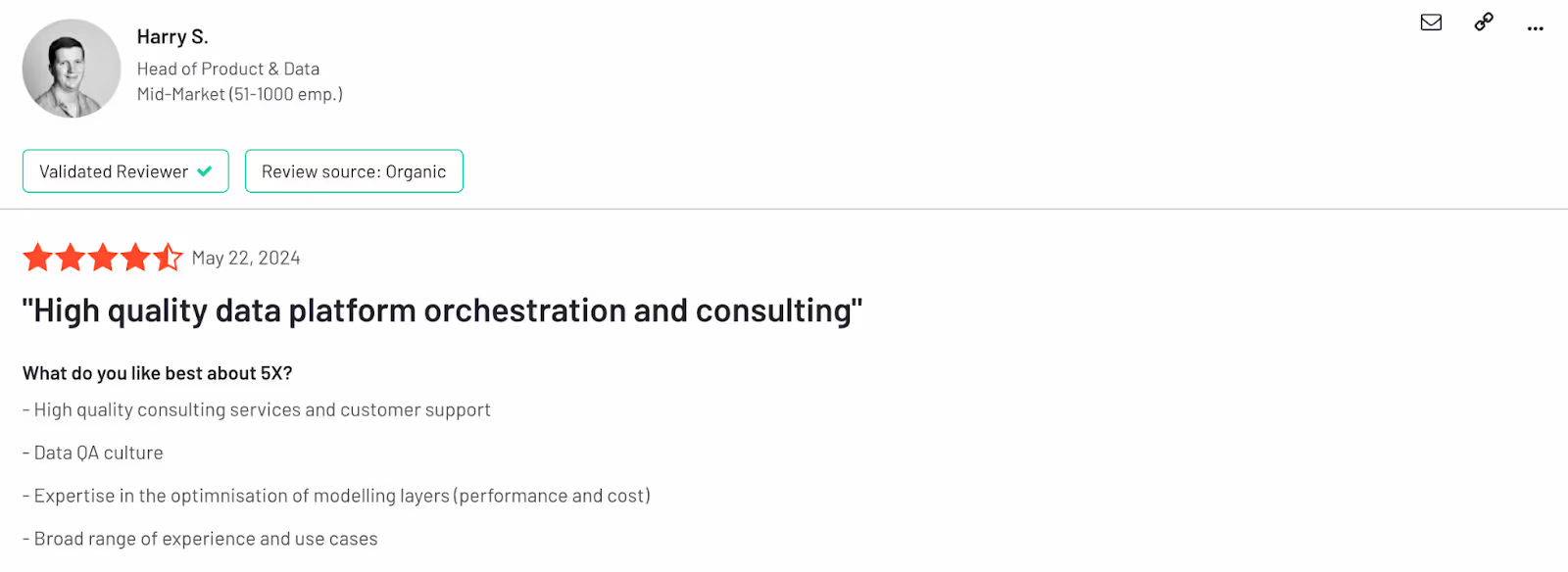

Why users choose 5X for data orchestration

- Unified dev experience: A unified interface to build end-to-end data pipelines (ingest, model, orchestrate, and analyze) in SQL or Python

- Boost productivity: Frictionless end-to-end development workflow for data teams. Easily build, test, deploy, run, and iterate on data pipelines

- Flexibility: 5X gives you the flexibility to create DAGs via UI or code, so you can choose the method that best fits your workflow

- Pre-built templates: Reduce development time and boost productivity with 5X’s pre-built templates

- Enhanced data reliability: Be confident in the data your team delivers and rest assured that it’s trusted, accurate, and timely for your stakeholders

Pros

- Easy to use platform: User-friendly and intuitive, allowing users to manage data tasks efficiently.

- Centralized management: Offers a unified platform for various data-related needs, eliminating the need to switch between multiple tools.

- Cost-effective: 5X offers pre-negotiated vendor rates and warehouse optimization

- Expert consulting: 5X offers consulting services with knowledgeable data professionals who can help with data strategy, implementation, and best practices.

- Responsive support: Get expert support for all your data needs.

Cons

- Relatively new tool: 5X is younger than many established orchestrators

- Learning curve: There may be a learning curve for new users

Pricing

- 5X offers pay-as-you-go pricing with no up-front costs: you only pay for the products you use. See 5X’s pricing page for details.

G2 rating: 4.8 out of 5

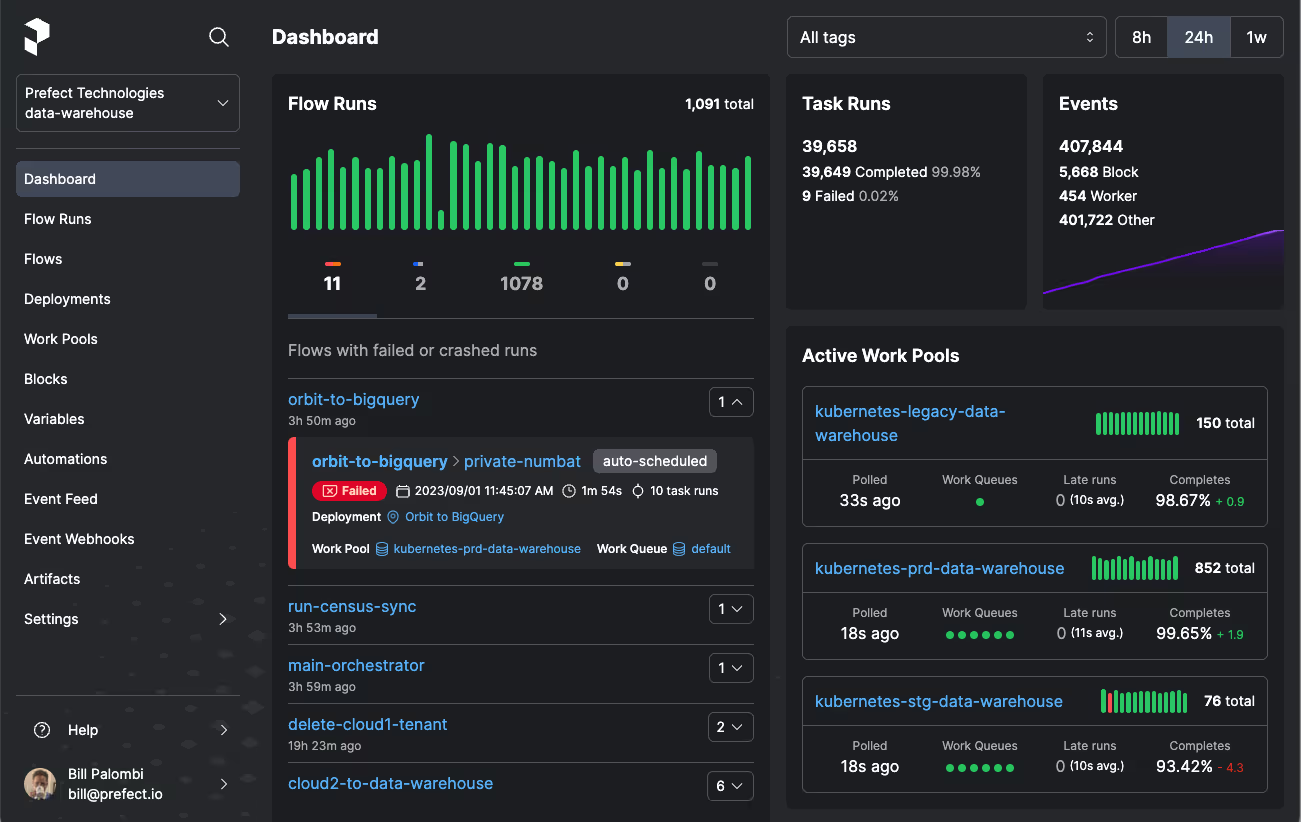

2. Prefect

Prefect is an open-source orchestration tool for building resilient data pipelines in Python. Its pipelines can adjust dynamically to their environment and recover from unexpected changes.

Why users like Prefect

- Schedules flows automatically for timely execution

- Freedom to either self-host the open-source version or use Prefect Cloud

- Orchestrate workflows with a single Prefect decorator

- Sends alerts to various messaging services like Slack, Discord, email, and Teams

- Single command to set up orchestration server
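Much of Prefect's resilience comes from wrapping plain functions in decorators that handle failures for you. The sketch below is a hypothetical, standard-library stand-in (not Prefect's own code) that shows the retry idea: the `task` decorator re-runs a failing function up to `retries` extra times. In Prefect itself, retries are configured as keyword arguments on its `@task` decorator, for example `@task(retries=3, retry_delay_seconds=10)`.

```python
import functools
import time

def task(retries=0, retry_delay_seconds=0.0):
    """Hypothetical stand-in for an orchestrator's task decorator:
    re-run the wrapped function up to `retries` extra times on failure."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(retries + 1):
                try:
                    return func(*args, **kwargs)
                except Exception:
                    if attempt == retries:
                        raise  # out of retries: surface the original error
                    time.sleep(retry_delay_seconds)
        return wrapper
    return decorator

calls = {"n": 0}

@task(retries=2)
def fetch_orders():
    # Simulates a flaky API that fails twice before succeeding.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary outage")
    return ["order-1", "order-2"]

print(fetch_orders())  # ['order-1', 'order-2'] after two failed attempts
```

The pipeline code stays focused on the business logic, while the decorator decides how to react when an upstream system misbehaves.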

Pros

- Build complex workflows with ease

- Supports dynamic scheduling and reactive workflows

- Provides comprehensive monitoring and logging capabilities

- Seamlessly connects with various data platforms and tools

- Free core version with a scalable, paid cloud service

Cons

- Learning curve for new users

- Poor documentation with a lack of examples

- Lacks native support for certain niche use cases

- Managing infrastructure can be complex without Prefect Cloud

Pricing

- Free: All core features

- Pro: $1,850/mo, self-serve plan for startups and small teams

- Enterprise: Contact sales, advanced features with admin controls

G2 rating: 4.5 out of 5

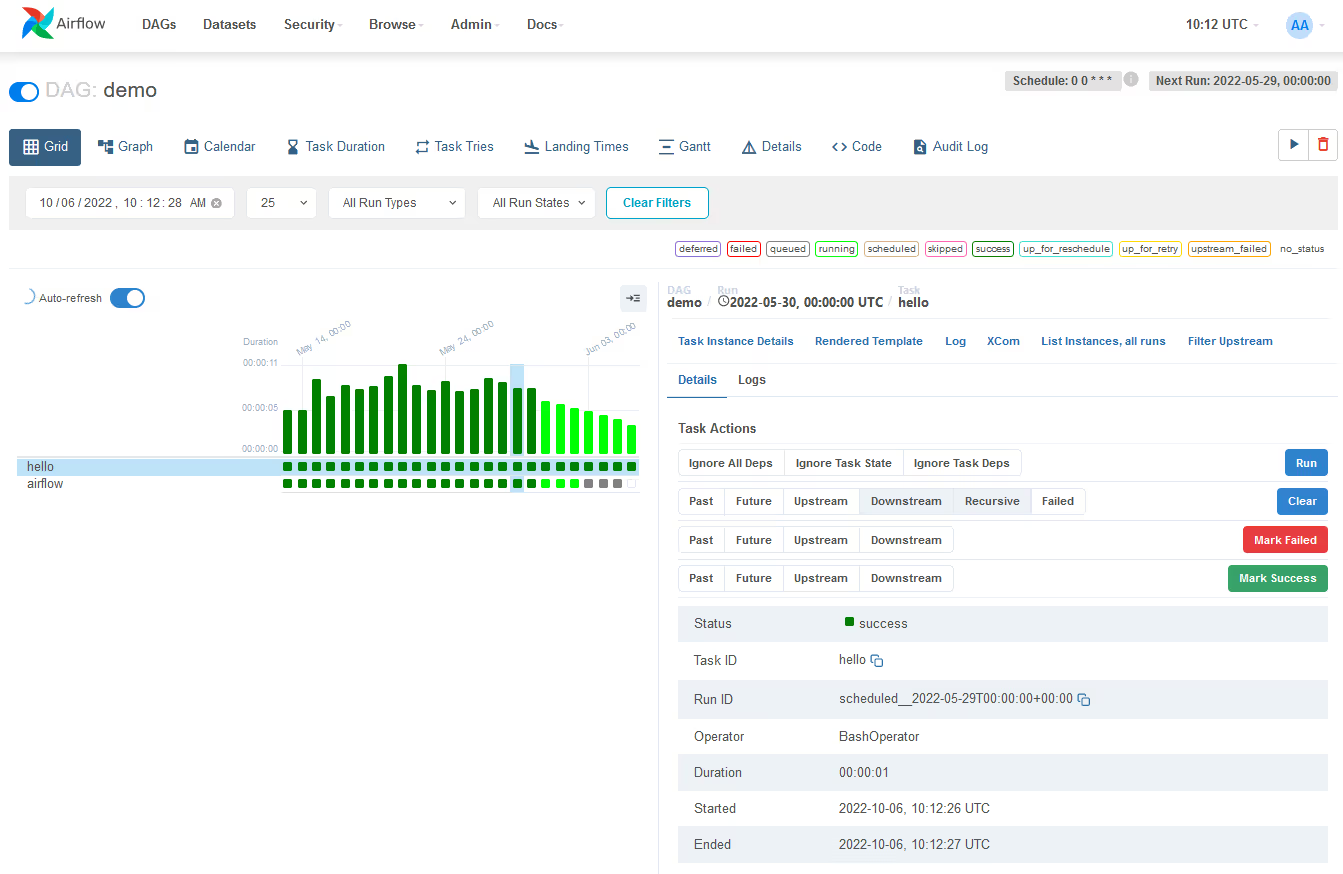

3. Apache Airflow

Apache Airflow is an open-source, Python-based orchestration tool for developing, scheduling, and monitoring batch-oriented workflows. Because workflows are defined in Python, you can connect them to virtually any technology.

Why users choose Apache Airflow

- Data-aware scheduling that runs workflows when upstream datasets are updated

- Dynamic task mapping

- Event-driven workflows

- Deferrable operators

- A scheduler that can run many data pipelines concurrently
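Dynamic task mapping means the number of task instances is decided at runtime from upstream output instead of being hard-coded in the DAG. Airflow exposes this through `.expand()` on its tasks. The sketch below only imitates the concept with the standard library's `ThreadPoolExecutor`; the `list_files` and `process` functions are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def list_files():
    # Hypothetical upstream task: the file list is only known at runtime.
    return ["sales_01.csv", "sales_02.csv", "sales_03.csv"]

def process(filename):
    # Hypothetical per-file work; here it just derives a table name.
    return filename.removesuffix(".csv").upper()

def run_mapped(upstream, mapped_task, max_workers=4):
    """Fan out one mapped_task instance per upstream item, then collect
    the results in the same order as the inputs."""
    items = upstream()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(mapped_task, items))

print(run_mapped(list_files, process))
# ['SALES_01', 'SALES_02', 'SALES_03']
```

If tomorrow's run finds ten files instead of three, the same definition produces ten task instances with no change to the pipeline code.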

Pros

- Supports a wide range of integrations

- Scales well and handles large, complex workflows efficiently

- The active community provides ample support

- The platform is highly customizable

- Easy to set up

Cons

- UI can be clunky

- Troubleshooting errors and debugging can be cumbersome

- Limited set of built-in features

- Not friendly for non-technical users

Pricing

- Apache Airflow itself is completely free to use, with no licensing fees. However, the overall cost of running Airflow in production depends on factors such as infrastructure, storage, and the metadata database.

G2 rating: 4.3 out of 5

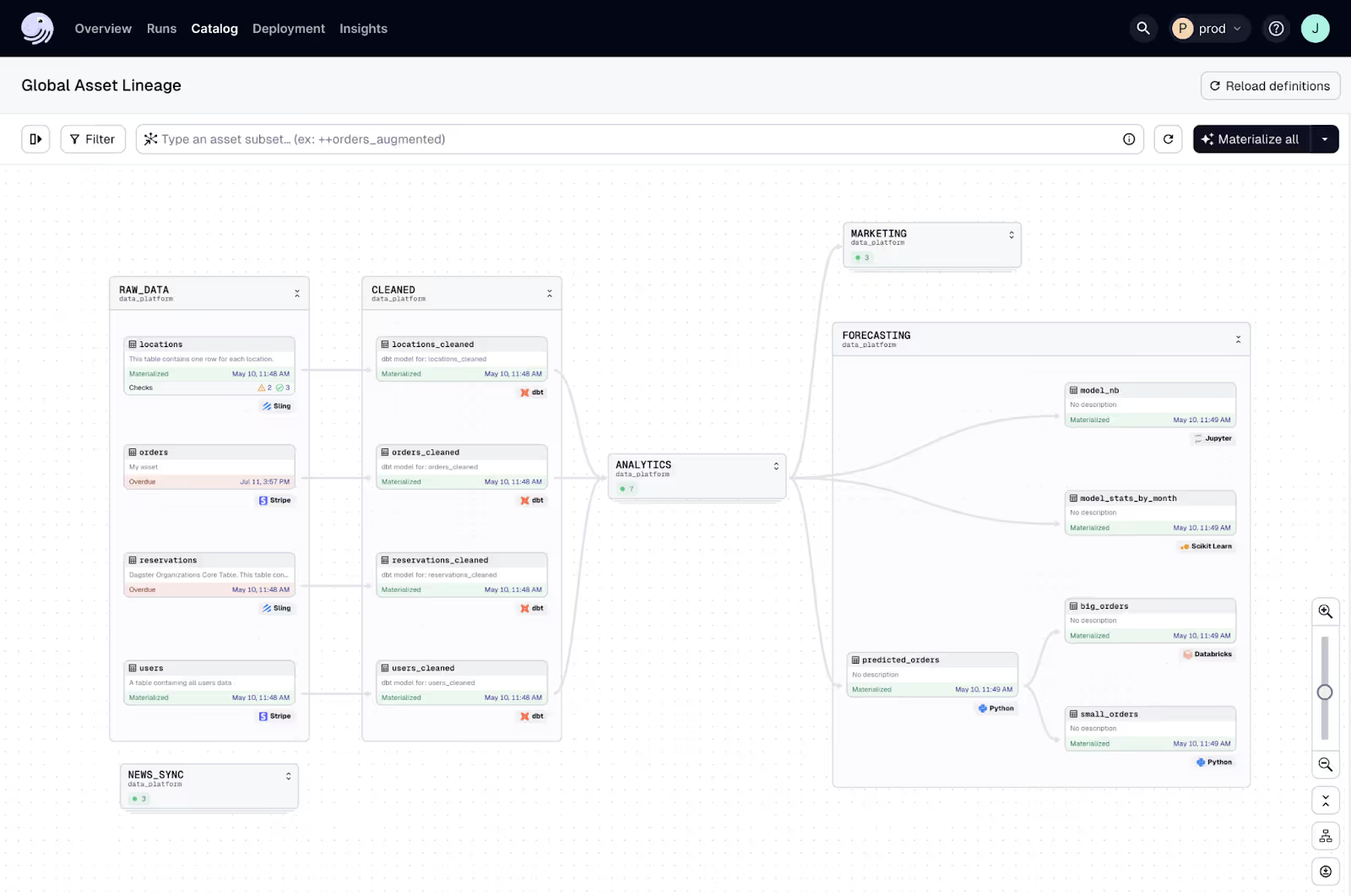

4. Dagster

Dagster is an orchestrator that's designed for developing and maintaining data assets. Dagster’s asset-centric approach makes it easy to understand and manage data pipelines by clearly showing how assets like tables and reports are produced. It also simplifies debugging and quality checks, as you can easily track asset status, diagnose issues, and apply best practices uniformly across teams.
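To show what "asset-centric" means in practice, here is a toy asset registry in plain Python. It is not Dagster's API: the `asset` decorator and `materialize` function below are hypothetical. It does mirror one Dagster convention, though: an asset's upstream dependencies are named by its function parameters, so the dependency graph falls out of the asset definitions themselves.

```python
import inspect

ASSETS = {}

def asset(func):
    """Hypothetical mini asset decorator: register the function under its
    name; its parameter names identify its upstream assets."""
    ASSETS[func.__name__] = func
    return func

@asset
def raw_orders():
    return [{"sku": "A", "qty": 2}, {"sku": "B", "qty": 1}, {"sku": "A", "qty": 3}]

@asset
def orders_by_sku(raw_orders):
    totals = {}
    for row in raw_orders:
        totals[row["sku"]] = totals.get(row["sku"], 0) + row["qty"]
    return totals

@asset
def top_sku(orders_by_sku):
    return max(orders_by_sku, key=orders_by_sku.get)

def materialize(name, cache=None):
    """Build an asset, first building (once) every upstream asset it names."""
    cache = {} if cache is None else cache
    if name not in cache:
        func = ASSETS[name]
        upstream = inspect.signature(func).parameters
        cache[name] = func(**{dep: materialize(dep, cache) for dep in upstream})
    return cache[name]

print(materialize("top_sku"))  # A  (5 units vs. 1 for B)
```

Because each asset is a named, addressable unit, you can ask for any one of them and see exactly which upstream assets had to be produced first, which is what makes status tracking and debugging easier.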

Why users choose Dagster

- Asset-centric approach with clear definitions and dependencies

- Breaks down pipelines into reusable components

- Automate pipeline execution based on time-based schedules or external events

- Integrate with tools like dbt, Spark, and Pandas

Pros

- Dagster provides a straightforward and user-friendly interface

- The Dagster UI is noted for its top-notch quality

- Detailed documentation

Cons

- The pricing model, based on serverless computing and Dagster credits, can be confusing

Pricing

- Solo: $10 per month, offers 5,000 Dagster Credits and one developer seat

- Team: $100 per month, caters to small- and medium-sized teams, 20,000 Dagster Credits and three developer seats

- Pro: Contact sales for customizable credits and user counts

Reddit review

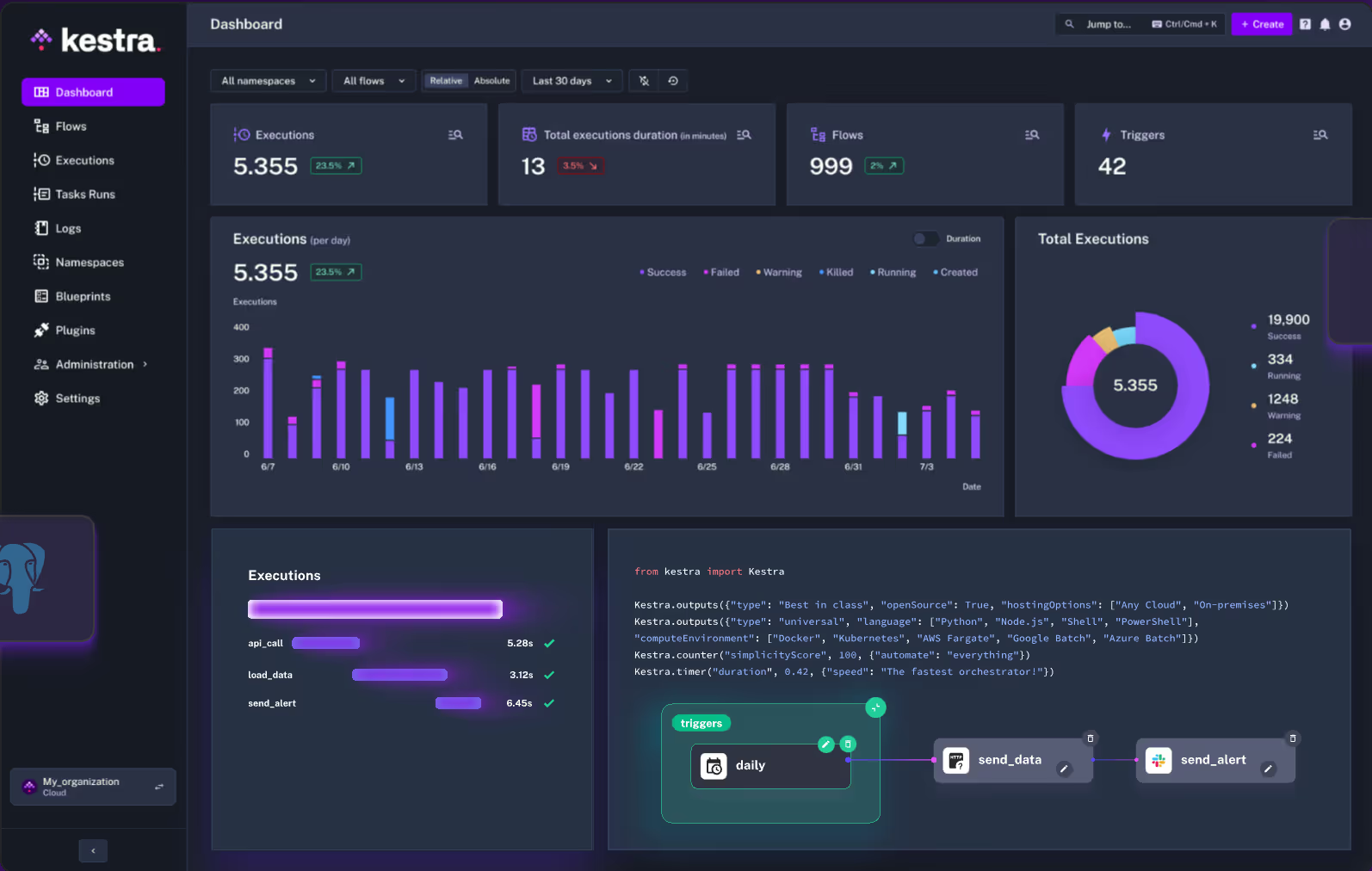

5. Kestra

Kestra is an open-source data orchestration platform that enables engineers to manage critical workflows through code. It simplifies pipeline creation with a library of pre-built components, a built-in code editor, and Git and Terraform integration.

Why users like Kestra

- Create complex workflows with ease using its intuitive interface and code editor

- Comprehensive plugin ecosystem

- Advanced flow and task monitoring with metrics, logs, and notifications

- Highly extensible and customizable architecture

Pros

- YAML interface lets less technical users create data workflows

- User-friendly UI

- Supports various cloud providers and tools with plugin support

- Supports API and event-driven workflows

- Built-in REST API

Cons

- Pipelines are defined in YAML rather than Python

- Embedding Python in YAML files can be cumbersome

- Documentation lacks examples

Pricing

Kestra is free to use. However, there are additional costs for hosting, infrastructure, and any third-party services.

6. Mage

Mage is an open-source platform for building and managing data pipelines in Python, SQL, and R, with support for both batch and real-time processing. Like other orchestration tools, it helps manage and automate the flow of data between systems.

Why users like Mage

- Schedule and manage data pipelines with observability

- Interactive Python, SQL, & R editor for coding data pipelines

- Real-time ingestion

- Build, run, and manage your dbt models

Pros

- User-friendly with easy setup

- Supports multiple languages including Python, SQL, and R

- Collaborative platform

- Offers a free, self-hosted option

Cons

- Limited documentation and resources compared to more established tools

- Limited community support compared to mature tools

Pricing

- Mage is free as long as you self-host it

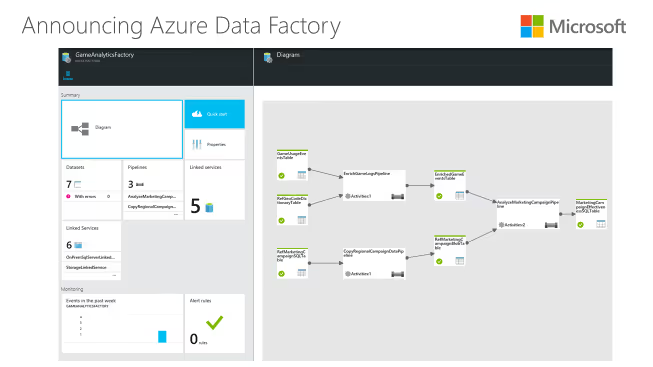

7. Azure Data Factory

Azure Data Factory (ADF) is a cloud-based data integration service that allows you to create data-driven workflows to move data between various on-premises and cloud-based data stores.

Why users like Azure Data Factory (ADF)

- Broad connectivity to different data sources

- Automates data processing using custom event triggers

- Compresses data during copy to optimize bandwidth

- Create customizable data flows

- Role-based access control

Pros

- Seamless data integration across a wide variety of data sources

- Supports both on-premises and cloud-based data sources, offering flexibility.

- Cloud-based solution can potentially reduce infrastructure costs

- Built-in data transformation capabilities

Cons

- Costs can escalate for large-scale data pipelines

- Might not be optimal for real-time data processing

- Requires time and effort to master the platform

Pricing

- Azure Data Factory is a pay-as-you-go service

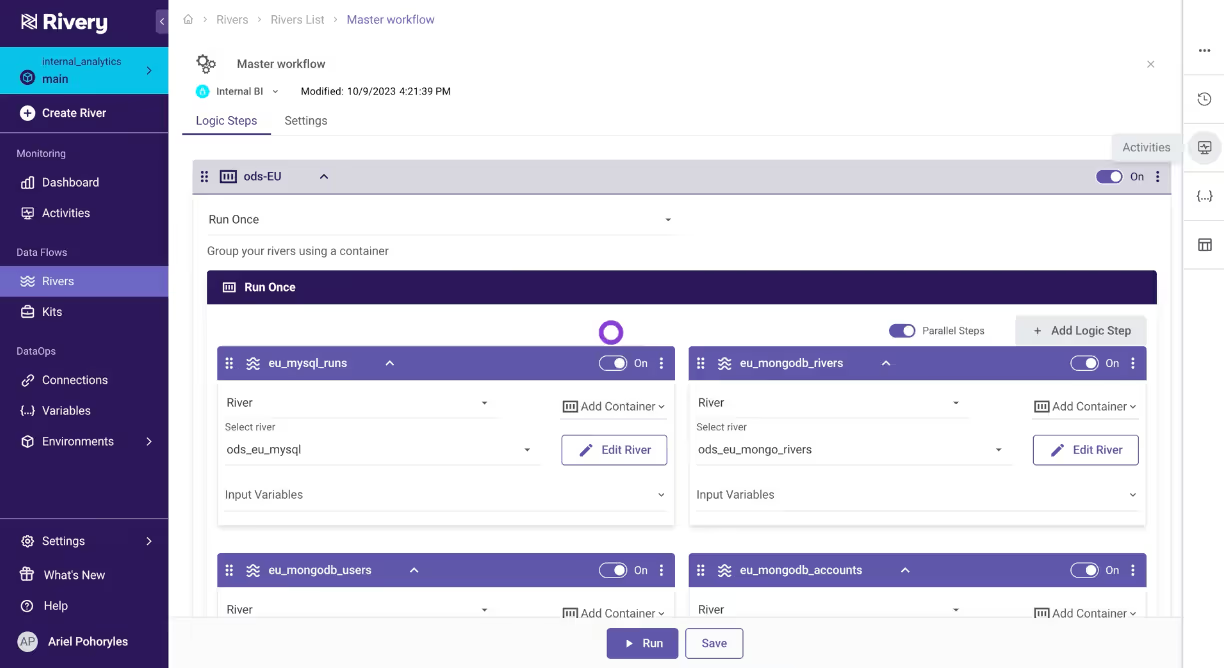

8. Rivery

Rivery is a cloud-based data orchestration platform that simplifies the complex process of building and managing data pipelines without the need for extensive coding or infrastructure management.

Why users like Rivery

- Accelerate workflow development with no-code orchestration

- Serverless data pipelines

- Reduce dev time with unified workflow templates

- Chain pipelines together instead of relying on complex time-based scheduling

Pros

- Offers features like conditional logic, loops, and Python scripting

- Pre-built templates, drag-and-drop functionality, and a no-code approach

- User-friendly interface

Cons

- Limited customization options

- Pricing can become substantial for large-scale data operations.

- Steeper learning curve for complex use cases.

Pricing

Rivery pricing is consumption-based. Consumption is calculated in credits called Rivery Pricing Units (RPUs).

- Starter: $0.75 per RPU, for small data and BI teams

- Professional: $1.20 per RPU, for advanced data teams

- Enterprise: custom plan
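To estimate spend under a consumption model like this, multiply the RPUs a workload consumes by the plan's per-RPU rate. The sketch below uses the Starter and Professional rates listed above and a hypothetical 1,000 RPUs per month; actual consumption depends on how Rivery meters your pipelines, and rates may change, so treat the result as a back-of-the-envelope figure.

```python
from decimal import Decimal

# Per-RPU rates as listed above (Enterprise is custom, so it is omitted).
RPU_RATES = {"starter": Decimal("0.75"), "professional": Decimal("1.20")}

def estimate_cost(rpus, plan):
    """Estimated spend in USD = RPUs consumed x the plan's per-RPU rate."""
    return Decimal(rpus) * RPU_RATES[plan]

# Hypothetical monthly consumption of 1,000 RPUs.
for plan in RPU_RATES:
    print(plan, estimate_cost(1000, plan))
# starter 750.00
# professional 1200.00
```

`Decimal` is used instead of floats so currency amounts are not distorted by binary rounding.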

On your way to finding the best data orchestration tool

Picking the right orchestration tool can be challenging, but it doesn’t have to be. With 5X, you get a unified platform for data ingestion, modeling, orchestration, and analysis, and can reduce your data platform costs significantly. You can integrate whichever tools you like and only pay for the 5X tools you need.

FAQs

How to choose the right data orchestration tool?

Why is data orchestration important?

What is data orchestration vs ETL?

What is the state of the data orchestration market?

What's the best orchestration tool for Snowflake?

Which data orchestration solution is best for enterprise?

Building a data platform doesn’t have to be hectic. Spending over four months and 20% dev time just to set up your data platform is ridiculous. Make 5X your data partner with faster setups, lower upfront costs, and 0% dev time. Let your data engineering team focus on actioning insights, not building infrastructure ;)

Book a free consultation.

Here are some next steps you can take:

- Want to see it in action? Request a free demo.

- Want more guidance on using Preset via 5X? Explore our Help Docs.

- Ready to consolidate your data pipeline? Chat with us now.
