Data Warehouse Buyer’s Guide

320 business hours!

That’s how long a junior to mid-level data engineer typically spends on researching, understanding requirements, shortlisting, getting a quote, comparing & evaluating, procuring, and implementing a data warehouse. Simply put, a whopping two months. But here’s the scoop: it can be done much quicker if done right.

Choosing the right data warehouse isn’t just about having a place for your data; it's about having the right place. With countless options, the selection process can be challenging. Each tool has its strengths and weaknesses, making it crucial to find one that aligns with your business needs.

You can find the right warehouse in three ways, two of which are arduous:

Browse the web, read reviews, and test the best-rated (often manipulated) tools, which may take months and bring uncertainty about the best fit.

Seek help from communities like Reddit, only to get unspecific answers, which doesn't help the cause.

Use this guide as the single source of truth with insights & tips from our in-house data experts.

See how 5X helps companies pick the best-fit data warehouse.

First things first, what is a data warehouse?

I came across this 👇 question on Stack Overflow while researching data warehouses:

I was asked by a customer what the term "data warehouse" really means.

I thought about ETL, details of the data model, differences to NoSQL, Clouds, 'normal' DBMS, MDM (Master Data Management) etc. but wasn't able to describe the term in a few words to him... (In fact I did some talking and left him un-illuminated.)

How can "data warehouse" described in 1-3 (or a bit more) sentences?

Keeping it stupidly simple, a data warehouse is the central hub for all the data an organization stores in its databases, raw files, CRM, and other apps. Over time, it becomes a unified repository of valuable historical data. That’s why it’s often referred to as the “single source of truth.”

Every data warehouse undergoes a routine extract, transform, load (ETL) process to gather data, clean it, and store it centrally. This data is then used for reporting, analysis, and mining by data analysts and BI managers.

Now you may wonder how it differs from a data lake or a data mart. I’ll go deeper on Data Lake vs Data Warehouse vs Data Mart in a separate blog real soon.

Subscribe to 5X Digest & be among the first to access our latest data insights

The 3-step data warehousing process

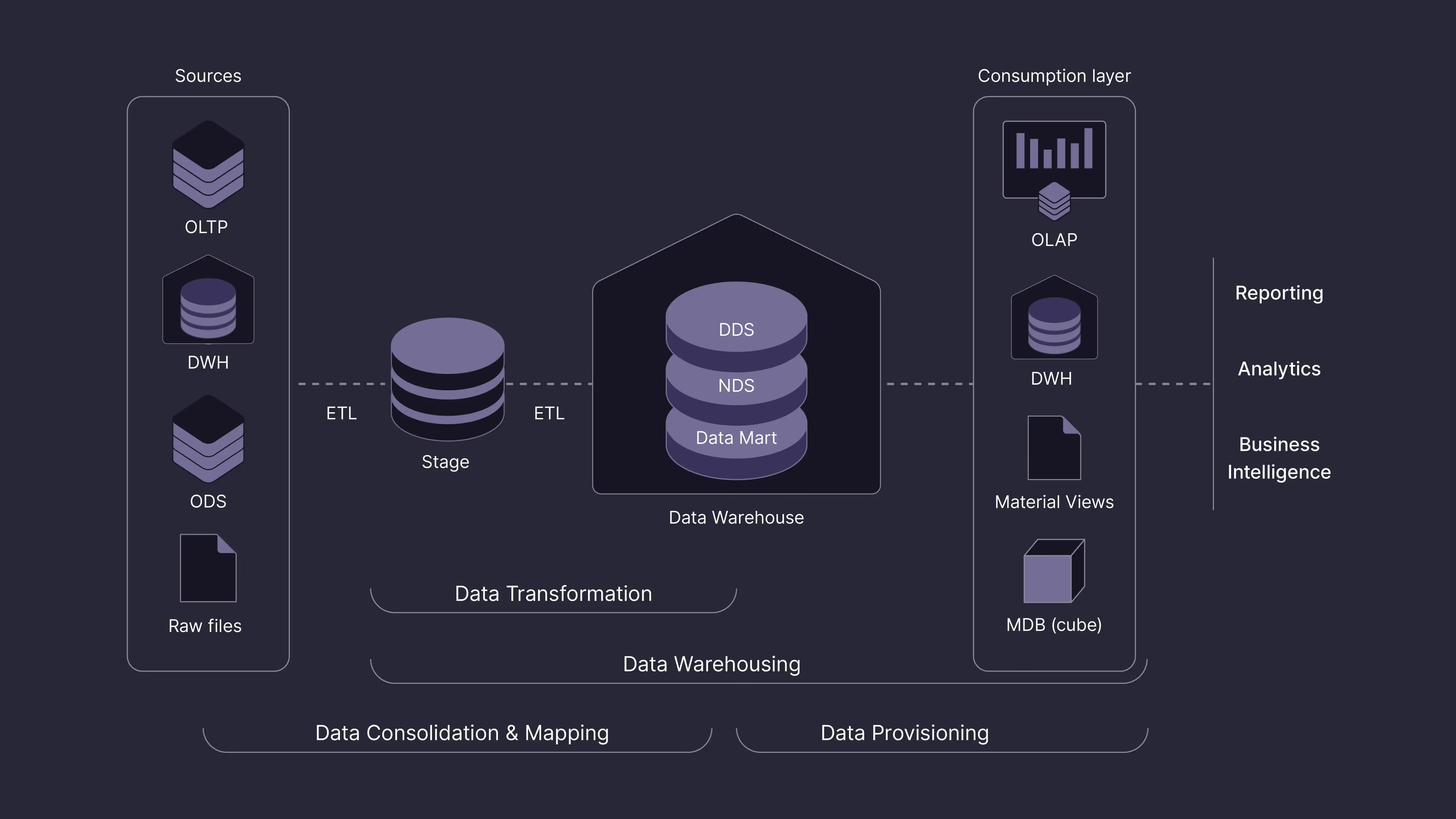

Now that we've got the lowdown on what a data warehouse is, let's jump into how it works and where it slots into the data architecture.

Data sourcing

Data is collected from various sources, including Online Transaction Processing (OLTP), Operational Data Store (ODS), another data warehouse, or raw data files.

Data warehousing

Step 1

Data transformation (Stage)

The collected data undergoes Extract, Transform, Load (ETL) processes and is stored in a staging area. The staging area is an intermediate storage space where raw data is temporarily held and transformed before loading into the data warehouse.

Step 2

Data transformation (Warehouse)

The staged data is then moved from the staging area to a data warehouse. This warehouse may be a dimensional data store, normalized data store, data mart, or other data repository.

Step 3

Data provisioning (Warehouse to Consumption layer)

The transformed data from the data warehouse is provided to the consumption layer. This layer can include Online Analytical Processing (OLAP), another data warehouse, or a multidimensional database (cube).

Data usage

The data from the consumption layer is utilized for reporting, analytics, and business Intelligence (BI).

Here’s a visual representation of the data flow from sources to the consumption layer.

Benefits of using a data warehouse

While there are tons of benefits of using a data warehouse, here are the top three:

Single Source of Truth

A data warehouse consolidates and organizes large amounts of data into a central database for teams to run their analytics on.

Quick decision-making

As data is readily available, a data warehouse makes it easy for stakeholders to access data, speeding up decision-making.

Enhanced data security

In light of growing cyber threats, businesses need to focus on keeping their data safe. Safeguarding data in a central warehouse is relatively straightforward as opposed to several data banks and servers. Most data warehouse solutions provide security features like user groups to control access, slave read-only access, and encryption techniques.

The "slave read-only" access means making a copy of the database that allows only reading, not writing. It helps balance data access, backups, and security by limiting changes to the main database.

Types of data warehouses

Data warehouses are typically grouped under many buckets based on the processing type (real-time vs. batch), storage format (columnar vs. row-based), data structure (relational vs. NOSQL), and more.

However, when selecting a warehouse for your business, you should first evaluate whether you need an on-prem or a cloud-based solution. Here’s what each means:

On-premises data warehouses

On-prem data warehouses, like IBM DB2, Microsoft SQL Server, Vertica, and SAP HANA, are set up in-house. This means the organization handles both the hardware and software.

Ownership and control

With on-premises setup, organizations have total control over everything—hardware, software, and access. Whether rolling with standard or custom servers, the organization keeps hands-on access to the hardware. And if anything goes wonky, the IT team can dive into every software layer to sort things out.

High security

To amp up security, on-prem warehouses rely on encryption, access control lists, and physical security measures. This ensures that sensitive data stays protected, thwarting any unauthorized access, disclosure, or modification.

High availability

On-prem warehouses have backup plans and extra hardware to keep things smooth. If there's a hiccup—like hardware issues, network glitches, or maintenance—the data warehouse easily switches to backups, so the data flow is not interrupted.

High upfront cost

Setting up an on-prem data warehouse costs a pretty penny compared to renting from the cloud. You're dishing out for hardware, software licenses, infrastructure, and skilled personnel. And as tech moves forward, get ready to open your wallet for upgrades, making it a pricier long-term deal.

Maintenance

On-prem data warehouses need regular upkeep to ensure performance and data quality, removing resources from essential business tasks.

Scalability issues

Growing on-prem data warehouses isn't a walk in the park. Businesses might have to invest on new hardware or software, cranking up the costs. Scaling up needs meticulous setup and data spread-out to keep the system running smooth, making it complex and time-consuming.

Cloud-based warehouses

A cloud-based data warehouse is like a virtual storage space for data, but it's not on your regular office servers. Instead, providers like AWS, GCP, or Azure host it in the cloud.

Pay-as-you-go

Cloud-based solutions offer flexible pricing. You don't need to invest upfront in physical servers. Pay only for what you use and avoid spending on idle resources.

Compatibility

Cloud data warehouses smoothly team up with both on-premise and cloud systems. They handle all sorts of data and play nice with BI and ML tools on top cloud providers like AWS, Azure, and GCP.

Ease of use

Cloud data warehouses are a breeze to use! They've got simple interfaces and handy tools for easy setup, configuration, and upkeep.

Highly scalable

Scale on the fly – adjust storage and computing power whenever you need without breaking the bank on new hardware.

Vendor lock-in

Choosing a cloud data warehouse ties you to that provider. Switching can be tricky due to technical bumps and contract intricacies.

Data latency

Things might take a bit longer as your data is outside the organization's network. Cloud services got your back, though, especially those with multi-region support. They stash your data in the regions you fancy, helping cut down on delays.

Questions to ask yourself when deciding on a data warehouse

What’s my budget and the cost structure of the data warehouse?

5X Tip: Data warehouse pricing hinges on storage, compute resources, and features. Select a data warehouse that aligns with your budget and growth plans. To budget wisely:

- assess your storage and processing needs,

- prioritize scalability, and

- balance setup costs with long-term operational expenses.

How’s the performance of the data warehouse?

5X Tip: The performance of a data warehouse is important for ensuring that you can access your data quickly and easily. You should consider the volume of data you need to store, the frequency of queries, and the response time requirements. Assess how well the data warehouse handles queries of varying complexity and data sizes.

Should I go for a vendor that supports massively parallel processing (MPP)?

5X Tip: Opt for a data warehouse that supports MPP, which can seriously boost query execution speed. Look for a platform that can distribute queries simultaneously across multiple nodes or clusters.

Massively Parallel Processing (MPP) is a computing architecture where multiple processors work together to handle large datasets and execute tasks concurrently.

How do I assess security and compliance?

5X Tip: Choose a data warehouse with built-in encryption, authentication, and authorization features to keep your sensitive data safe and sound. Make sure it plays nice with industry regulations like GDPR or HIPAA that your organization follows. Also, check if the provider has security certifications and features like audit trails and monitoring.

How do I weigh the benefits of batch processing against real-time processing?

5X Tip: It depends on your needs. Batch processing is great for handling large volumes of data at once, while real-time processing provides immediate insights. Consider the nature of your data and the speed at which you need results.

What other data integrations do I need?

5X Tip: Your data warehouse is the heart of your data platform, and it integrate with the other tools you're using—whether it's for ingesting data, BI, or anything else. If you're bringing in data directly, having connectors is a must for smooth operations.

What type of data do I need to store?

5X Tip: Think about the mix of data types you're dealing with—structured, semi-structured, relational, or non-relational. Make sure the chosen solution is a good fit for your specific data formats, even handling mixed formats like JSON or unstructured text.

Comparing the top data warehouse tools

Comparison Matrix

Founded at

Headquarter

Cloud Compatibility

Pricing Plans

Pros

Cons

Pricing

Structure

Compute

cost

Scalability

Ease of use

Real-time data processing

Partner ecosystem

Customer support

User access control

Performance

Cost management

Other features

Pricing

Ease of use for power users & developers

Ease of use for business users

Visualizations

Embedded dashboards

Cloud compatibility

Support

Notifications

Mobile friendly

Snowflake

No upfront costs, only pay for queries run on data stored in S3, can be cost-effective, especially for companies dealing with massive datasets and infrequent data analysis needs.

- Medium (0.5x): $8.00

- Large (x): $16.00

- XLarge (2x):$32.00

Separate storage and compute allows you to scale independently of each other

User-friendly SQL interface, little to no learning curve for existing Redshift users. Great for users with or without any coding experience.

Snowflake combined with Apache Kafka can provide cost-efficient near real-time analytics, but requires additional setup

Widest range of data integration partners, and tier 1 database integration for all SaaS tools

Offers community support, a robust knowledge base, diverse support plans, and comprehensive training; yet, standard users may face slow response time depending on the issue.

Discretionary Access Control (DAC) and

Role-Based Access Control (RBAC)

Low latency due to its optimized architecture for data storage and processing.

More hassle-free in most of the scenarios with the automatic partitioning & clustering, column compression, and multi-cluster auto-scaling, preventing spikes in cost due to sub-optimal setup.

It is cheaper for development and testing since you only get charged based on the compute hours.

- Snowpark: Run spark queries

- Snowflake Time Travel: Historical data access

- Object-level access control

- Snowflake Fail-safe: Historical data is protected in the event of a system failure or other event

- Snowsight for account and general management

- SnowSQL (Python-based command line client)

- Snowpipe to load data in micro-batches from internal and external stages.

Google BigQuery

Based on storage and compute consumption. It offers five plans: Free, Storage, Compute, Data ingestion, Data extraction.

- 300 slots (0.5x): $8.22

- 600 slots (1x): $16.44

- 1200 slots (2x): $32.88

Serverless and fully managed architecture allows for seamless scalability, with no resources to provision or manage

GBQ is compatible with GCP services and Google Workspace suite. Intuitive SQL-like interface further simplifies query writing and execution, enhancing overall user experience.

Low-latency replication from relational databases directly to BigQuery for near real-time insights.

Offers almost identical integrations as Snowflake. Integrations with other Google Cloud Platform services, such as Cloud Storage, Cloud Functions, Google Workspace suite, and Data Studio are a plus.

Robust customer support through extensive self-serve options like tutorials, documentation, and community engagement. For personalized assistance, you can choose from the varying support plans.

Granular IAM-based permissions with basic, predefined, and custom roles.

Low latency queries due to its fully managed server when used for high speed analysis in large datasets. Also depends on data size and structure, query complexity, and slot allocation.

Offers flexibility to choose between on-demand and flat-rate pricing.

User-level cost management is a challenge.

Project-level monitoring is available but you can’t tell who is running useful or bad queries. Admin rules to control query execution could bring down costs.

- Duet AI: Natural language chat assistance for real-time guidance on performing specific tasks

- Looker Studio: Built-in BI to create and share insights

- BigQuery geospatial: Enhance your analytics workflows with location intelligence

- Data clean rooms: Create a low-trust collaborative environment without copying or moving the underlying data right within BigQuery.

Amazon Redshift

Pay for storage, compute, and data processed; often high due to advanced processing capabilities. Priced based on DBU, usually 2-5x more expensive than EMR cluster from AWS.

- 3x ra3.4xlarge (0.5x): $9.78

- 5x ra3.4xlarge (1x): $16.30

- 10x ra3.4xlarge (2x): $32.60

Limited within the AWS ecosystem

User-friendly SQL interface, suitable for traditional data engineers.

Can integrate with Kinesis for streaming data but primarily processes data in batch mode in Redshift.

Redshift’s partner ecosystem isn’t as vast as its counterparts. Heavily depending on AWS ecosystem. Note: Some integrations are only available in select AWS Regions.

Offers a ticket-based support system where specialists connect to resolve AWS services issues.

Column Level Access Control and Role-Based Access Control (RBAC)

Higher latency, especially for complex and large-scale processing tasks due to its reliance on S3 for data storage.

Most cost-efficient with the right set up of clusters and proper SQL transformations and queries. Require skilled engineers.

For example, the cost is based on the data scanned and if there is no partitioning column provided on tables, then AWS will scan the entire table and charge $5 per TB of data.

Redshift performance is susceptible to cache misses in the shared query compilation cache.

- Massively Parallel Processing

- Column-oriented data store

- Result caching to deliver sub-second response times for repeat queries

- Automated infrastructure provisioning

- Fault tolerance using data re-replication and node replacement

- Network isolation to restrict network access to organization's cluster using Amazon VPC

Snowflake

Snowflake is a fully-managed cloud-native data platform providing scalable data warehousing, data engineering, and data security solutions.

Founded at

Headquarter

Cloud Compatibility

Pricing Plans

Based on actual storage, compute, and cloud usage

Note: A Snowflake credit is a unit of measure consumed when using resources such as a virtual warehouse, cloud services layer, or serverless features.

Our Recommendation

Use Snowflake if ...

- You need to run unlimited concurrent workloads within seconds

- You prefer scalability features like auto-scaling and auto-suspend

- You’re looking for a fully SQL-based approach

Pros

Large vendor ecosystem that has native integration with more vendors, such as BI/reverse ETL/data observability tools, and other SaaS tools such as ads channels, event tracking tools, and payments.

Auto-scaling capabilities for compute resources offer ease of use in handling varying workloads.

Provides row-level & column-level data governance.

Auto-suspension and auto-resumption features simplify & automate warehouse monitoring & usage based on the workload.

Snowflake has a Partner Connect program where you can choose from different third party tools to integrate, selecting the one that works best for your business.

Cons

The fully-managed nature of Snowflake means users have less customization over the underlying infrastructure.

Need a workaround solution for Google connectors / sources compared to BigQuery.

The Snowflake web interface allows to create and manage Snowflake objects like virtual warehouses and databases, load limited data into tables, execute ad hoc queries and other DDL/DML commands, and view past queries.

User Interface

Intuitive navigation simplifies user experience with easy-to-use menus and navigation.

Clean design with minimum unnecessary elements for a focused and clutter-free workspace.

SQL Editor

You can format SQL queries in Snowsight Worksheets.

Standard SQL support enables users to write queries using standard SQL syntax.

Syntax highlighting of code elements for improved readability.

Auto-complete speeds up query writing by suggesting and completing statements.

Real-time error detection identifies errors as users type, aiding in quick debugging.

Transparent data exploration, aligning with a culture of informed decision-making

Discretionary Access Control (DAC): Every digital entity is assigned an owner. The owner can decide who else is allowed to access or interact with the object.

Role-Based Access Control (RBAC): Permissions are grouped into roles and users are assigned these roles based on their access levels.

Snowflake’s cost management framework is divided into three components:

Visibility: Helps you understand cost sources, get to the core of it, attribute them to the right entities within your org, and monitor them to avoid unnecessary spends. The admin view provides an expensive query view, top warehouses by cost, and cost predictions. Additionally, it offers a very dynamic view of credit usage trends that are customizable. Users can set pulses to track resource usage.

Controls: Kind of extends the purpose of Visibility一control costs by setting limits to, say, how long a query can.

Optimization: Snowflake recommends taking action whenever your Snowflake usage follows any pattern like blocked queries due to transaction locks, copy commands with poor selectivity, single row inserts and fragmented schemas, etc. Check out the recommendations for different usage patterns here.

Pricing

Structure

Compute

cost

Scalability

Ease of use

Real-time data processing

Partner ecosystem

Customer support

User access control

Performance

Cost management

Other features

Pricing

Ease of use for power users & developers

Ease of use for business users

Visualizations

Embedded dashboards

Cloud compatibility

Support

Notifications

Mobile friendly

Google BigQuery

Google BigQuery (GBQ) is a fully managed, serverless cloud data warehouse by Google Cloud Platform (GCP). It is designed for analyzing large datasets in real-time using SQL-like queries.

Founded at

Headquarter

Cloud Compatibility

Pricing Plans

Based on storage and compute consumption

Our Recommendation

Use BigQuery if ...

- You need a highly scalable solution to analyze very large datasets, of the order of petabytes.

- Require quick and responsive query processing for complex analytical tasks.

- Your organization already uses other Google Cloud services or products.

Pros

Advanced query optimization techniques like parallel processing, table partitioning, and columnar storage ensure optimal query performance.

Can handle really large datasets and scale to PB-sized warehouses.

As GBQ is fully-managed, maintenance and infrastructure will be the least of your worries.

Cons

Managing costs associated with large query volumes and data storage in BigQuery can be intricate. It needs vigilant monitoring and optimization.

Since GBQ is a part of GCP, you have to completely rely on GCP for all your data warehousing needs.

While it has integrations within the GCP suite, GBQ offers limited non-GCP integrations compared to Snowflake. Plus, it’s not compatible with non-GCP data warehouses like AWS and Azure.

User Interface

Clean interface that even non-technical users can easily get started. The SQL-like interface makes it easy to write and run queries. Users can retrieve the data they need using simple SQL queries, without having to be a pro at coding.

Intuitive and well-organized service navigation menus, making it easy to locate and access essential features with ease.

SQL Editor

Supports standard SQL syntax, making it accessible for regular SQL users.

The SQL editor uses syntax highlighting for enhanced code readability, making it easy to write and understand queries.

GBQ excels in fast query processing even for complex queries.

Granular permissions: Fine-grained control over access permissions allows admins to specify access at a detailed level.

IAM-based access control: Integration with Identity and Access Management (IAM) ensures secure access control within the broader Google Cloud ecosystem.

Detailed cost breakdown: GBQ offers transparency in its cost structure with a detailed breakdown of data processing costs.

Query cost controls: Users can implement query cost controls by setting quotas and limits to manage and predict spending

Pricing

Structure

Compute

cost

Scalability

Ease of use

Real-time data processing

Partner ecosystem

Customer support

User access control

Performance

Cost management

Other features

Pricing

Ease of use for power users & developers

Ease of use for business users

Visualizations

Embedded dashboards

Cloud compatibility

Support

Notifications

Mobile friendly

Redshift

Amazon Redshift is a fully managed, SQL-based, petabyte-scale cloud data warehouse solution provided by AWS Services.

Founded at

Headquarter

Cloud Compatibility

Pricing Plans

Node-based pricing with options for On-Demand or Reserved models

Our Recommendation

Use Redshift if ...

- You heavily rely on AWS services as a part of your operations.

- Complex analytical queries and large datasets are integral to your business.

- You want to access and analyze data without all of the configurations of a provisioned data warehouse.

- You need a zero-ETL approach to unify data across databases, data lakes, and data warehouses.

Pros

Linear Scaling from GBs to PBs

Familiar SQL language simplifies adoption

Built-in machine learning capabilities

Self-learning and self-tuning capabilities for performance optimization

Integration with Apache Spark to analyze large datasets

High concurrency support

Cons

Requires some manual intervention for certain configurations

Handling high concurrency may lead to performance issues during simultaneous query executions

Deploying Amazon Redshift on non-AWS servers may pose compatibility issues

No real distinction between storage and compute

While Redshift integrates well with other AWS services, ingesting data from external sources can be tedious.

Query performance can be slower than other warehousing solutions

User Interface

Redshift's straightforward SQL interface simplifies data warehouse management, making it accessible to data analysts and SQL developers.

Teams familiar with PostgreSQL can seamlessly transition to Redshift's query engine, as they share the same interface.

Some users complain if the UI could be more developer-friendly.

SQL Editor

Web-based analyst workbench to share, explore, and collaborate on data with teams using SQL in a common notebook interface.

Amazon Q generative SQL allows users to write queries in plain English directly within the query editor and create SQL code recommendations. Note that these features are subject to data access permissions.

Use Query Editor's navigator and visual wizards to browse database objects, create tables, and functions.

Collaborate and share query versions, results, and charts effortlessly with automatic version management in the query editor.

Amazon Redshift provides service-specific resources, actions, and condition context keys for IAM permission policies.

The account admin can attach permission policies to IAM identities (users, groups, roles) and services like AWS Lambda.

The admin gets to decide who gets the permissions, the resources they get access to, and the specific actions to allow on those resources.

Learn more about IAM access control here.

Adjust nodes based on actual usage.

Downsize during low demand for cost savings.

Purchase Reserved Instances (RI) for predictable workloads.

Optimize COPY command for smart data loading.

Implement efficient compression and distribution strategies.

Pricing

Structure

Compute

cost

Scalability

Ease of use

Real-time data processing

Partner ecosystem

Customer support

User access control

Performance

Cost management

Other features

Pricing

Ease of use for power users & developers

Ease of use for business users

Visualizations

Embedded dashboards

Cloud compatibility

Support

Notifications

Mobile friendly

Can’t make up your mind on the right warehouse?

Book a free consultation for personalized assistance!

Future trends in data warehouses

Augmented analytics

In simple language, augmented analytics means making data analysis a breeze for everyone by automating insights, improving data exploration, and offering user-friendly interfaces.

In tech speak - Augmented analytics in data warehouses amps up data analysis with machine learning and natural language processing.

Here's what we expect in this space:

- More automation in data preparation tasks

- Smarter query optimization

- Better NLP to reach more skill levels

- Putting the spotlight on human-friendly design for easy-to-use tools

- Tighter teamwork with AI for predicting, spotting anomalies, and digging into advanced insights

Serverless data warehousing

Serverless data warehousing simplifies data management without the burden of server upkeep, promoting flexibility and efficiency in analytics.

Serverless data warehousing is getting slicker, more adaptable, and full of innovation. With continuous strides in cloud tech, serverless architectures, and analytics, this field's set to keep booming. Here's the lowdown:

- Expect to see serverless data pipelines, handling data from start to finish without the fuss of managing infrastructure.

- Look out for clever auto-scaling algorithms predicting and adjusting resources based on workload changes.

- Watch for the rise of multi-cloud orchestration—it lets organizations smoothly use serverless data warehousing across different cloud solutions, ensuring seamless data management.

AI-assisted ETL

As AI tools become more sophisticated, AI-assisted ETL is gaining popularity. It helps automate routine tasks and lowers the risk of human errors.

AI in data warehousing is gearing up to make things more automated, handling real-time data and throwing in some predictive analytics. Here’s my two ounces:

- AI-boosted self-service ETL will make data integration a breeze for teams. More solutions are already on it.

- As AI keeps getting smarter, it looks like we can expect smooth, context-aware transformations in different data environments—like databases, cloud services, on-prem systems, and the like.

-

Context-aware transformations in AI-assisted ETL mean the system intelligently adapts data processing based on specific characteristics, business rules, user preferences, real-time conditions, data variability, and historical trends.

How 5X can help you procure and implement a data warehouse

Assessing needs for best-fit vendor recommendations

Choosing the perfect data warehouse vendor can be tricky. Businesses often struggle to find the right tool that perfectly suits their needs.

We get it – it's a maze. Once we dig into your business, checking out your needs, data sources, and security requirements, we'll point you to a tool that fits your budget like a glove.

Creating proof of concepts with your actual data

Building proof of concepts for selected tools is usually a big resource drain, taking 2-3 months and a ton of data team effort.

Don't worry, we make things faster without any hiccups. We create proof of concepts in under two weeks using your actual data. Rigorous testing ensures the chosen vendors not only meet but exceed expectations in key areas like query speeds, data processing, and scalability.

Ensuring best practice

Creating functional data pipelines is no walk in the park for businesses. Setting up the intricate web while sticking to best practices can be quite the challenge.

That's where we come in. 5X Services, our consultancy, can lend a hand in setting up your data pipelines, following the best practices, and running checks to guarantee top-notch data quality and integrity.

Streamlined negotiations and contract handling

Integrating data warehouse vendors with tools is often a headache, taking up precious time and distracting from crucial analytics efforts.

With 1-click onboarding on the 5X platform, all that manual work and upkeep vanishes, so you can put analytics first. The new warehouse vendor easily syncs up with data modeling and ingestion vendors via APIs.

Seamless integration with the rest of your data stack

Integrating data warehouse vendors with tools is often a headache, taking up precious time and distracting from crucial analytics efforts.

With 1-click onboarding on the 5X platform, all that manual work and upkeep vanishes, so you can put analytics first. The new warehouse vendor easily syncs up with data modeling and ingestion vendors via APIs.

Unified billing, user management, and insights

Managing invoices from multiple vendors can be a headache, throwing your finances off balance and adding extra tasks for data teams, stealing time from insights.

On 5X, your data warehouse vendors are on a single monthly bill, streamlining finances. Manage users, monitor usage, and centralize data hassle-free. Let your data team focus on insights rather than infrastructure.

Remove the hassle of buying & implementing a warehouse.

Speak to us!

Table of Contents

Continue Exploring