Data Reliability Guide: How To Build Trust In Your Data Pipelines And Eliminate Costly Errors



TL;DR

- Data reliability protects you from making big decisions based on the wrong data

- Data reliability means your data stays accurate, consistent, complete, and timely

- Reliable data turns dashboards into trusted decision-making tools

- Most data breaks come from human error, schema changes, bad integrations, silos, and a lack of proper monitoring

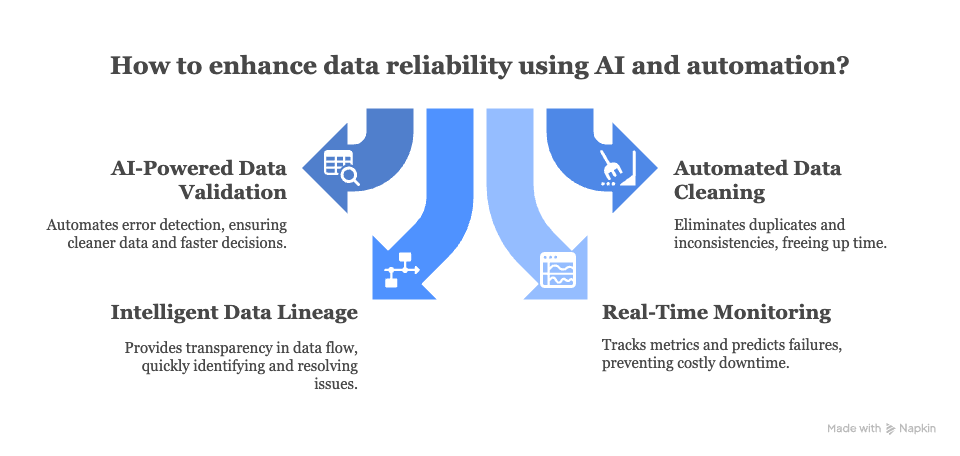

- AI automates validation, cleaning, lineage, and real-time anomaly detection

- Data observability tools help teams catch issues proactively

- Organizations must build a system where data is consistently trustworthy, teams make decisions with confidence, and bad data never blindsides you again

For founders and top executives, the only thing worse than having no data is making expensive business decisions based on unreliable data.

Every business leader has faced that moment when every decision feels shaky. That’s the quiet and costly ripple effect of unreliable data. In today’s world, where every forecast, customer journey, automation, and executive decision rides on information, data reliability is no longer optional.

Read this blog to understand data reliability, why it matters, and how to create it for your business.

What causes data to break?

If you’ve ever opened a dashboard and thought, “That number can’t be right,” you’ve witnessed a data break in action. It happens quietly, often without warning, and before you know it, entire teams are making decisions on inaccurate or incomplete data.

Here’s what causes your data to break:

- Human error: When teams still rely on manual data handling, even the smallest oversight can cascade through pipelines, reports, and business logic. Common examples include a missing comma in a CSV file, an incorrect data type in a spreadsheet, or a hastily updated schema

- Schema changes: Data pipelines depend on consistent structure. When a product team adds a new column, renames a field, or changes a format in the source system, it can instantly break downstream processes

- Bad integrations: Modern data stacks pull from multiple sources including CRMs, ad platforms, billing tools, web data analytics, and more. But when integrations aren’t well maintained, data gets lost in translation. APIs fail, connectors time out, and mismatched formats lead to partial or corrupted loads

- Outdated or incomplete data: When datasets aren’t refreshed frequently or fail mid-pipeline, you end up making decisions based on outdated insights

- Data silos: When marketing, product, and finance teams maintain separate versions of the truth, inconsistencies are inevitable. Different systems, metrics, and definitions produce conflicting reports

- Lack of monitoring and ownership: Many data teams fix issues only after something breaks. But without clear ownership and automated monitoring, problems can linger undetected for weeks. In short, data breaks when no one is accountable for keeping it whole
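To make the schema-change failure mode concrete, here is a minimal sketch of an automated drift check. It assumes a hypothetical `orders` feed with four columns; the column names, the `EXPECTED_SCHEMA` mapping, and the `detect_schema_drift` helper are illustrative assumptions, not part of any specific tool.

```python
from typing import Dict, List

# Hypothetical expected schema for an "orders" feed: column name -> Python type name.
EXPECTED_SCHEMA: Dict[str, str] = {
    "order_id": "int",
    "customer_id": "int",
    "amount": "float",
    "created_at": "str",
}


def detect_schema_drift(expected: Dict[str, str], row: Dict[str, object]) -> List[str]:
    """Return readable differences between the expected schema and one incoming row."""
    issues: List[str] = []
    # Columns the pipeline depends on: check presence and type.
    for column, type_name in expected.items():
        if column not in row:
            issues.append(f"missing column: {column}")
        else:
            actual = type(row[column]).__name__
            if actual != type_name:
                issues.append(
                    f"type change in {column}: expected {type_name}, got {actual}"
                )
    # Columns the source added without telling anyone.
    for column in row:
        if column not in expected:
            issues.append(f"unexpected column: {column}")
    return issues


# Illustrative scenario: the source team renamed "amount" to "total"
# and started sending order_id as text.
incoming_row = {
    "order_id": "1001",
    "customer_id": 7,
    "total": 49.9,
    "created_at": "2024-01-05",
}

drift = detect_schema_drift(EXPECTED_SCHEMA, incoming_row)
for issue in drift:
    print(issue)
```

Running a check like this before each load, and alerting a named owner when the list is non-empty, is one way to catch a renamed field before it reaches a dashboard.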

What is data reliability?

Data reliability is when you can trust your data every single time you use it.

Reliable data stays accurate, consistent, and complete over time, no matter how many systems it travels through or how many people touch it. It’s what separates a one-time “good dashboard” from an organization that makes confident, data-driven decisions every day.

In most cases, you need to define 'reliable' precisely enough that you can calculate it and compare datasets against that definition.

A reliable dataset is also one that was collected with as little bias as possible and sampled in a way that is close enough to random. The potential sources of bias depend on the kind of dataset (medical, longitudinal, two-sample, and so on), but that’s the main idea behind reliable data.

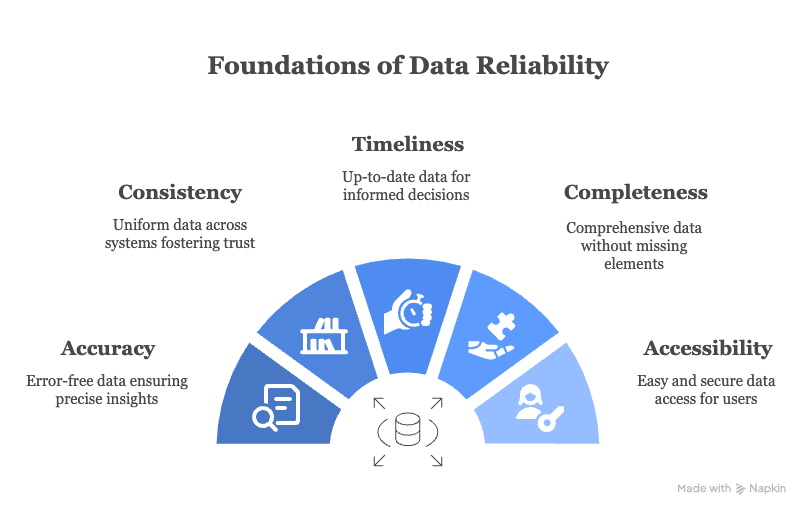

What are the features of data reliability?

Here’s what reliable data actually looks like in practice:

- Accurate: Reliable data is error-free. If a sales forecast is off by just 3%, that tiny mismatch can distort supply chain planning or revenue projections. Accuracy comes from constant validation, audits, and automated error detection, not one-off cleanups.

- Consistent: Whether a customer’s name appears in your CRM, billing, or support system, it should mean the same thing everywhere. When data conflicts across departments, trust breaks down. Consistency ensures everyone’s reading from the same playbook

- Timely: Even perfect data loses value if it’s stale. Reliable data is updated continuously so leaders make decisions based on today’s facts, not last quarter’s snapshots. Automated pipelines and real-time syncs make this possible

- Complete: Missing data does silent damage because it skews insights without you noticing. A reliable system ensures no missing fields, incomplete records, or gaps in lineage

- Accessible: Reliability also means usability. The right people should have quick, secure access to data without bottlenecks or dependency on IT. Cloud-native architectures make this seamless
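Several of these features can be turned into numbers you can track. The sketch below shows one possible way to score completeness, timeliness, and consistency using only the Python standard library. The sample records, the six-hour freshness threshold, and the function names are all assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

Record = Dict[str, Optional[object]]


def completeness(records: List[Record], required: List[str]) -> float:
    """Share of required fields that are populated (not None, not an empty string)."""
    total = len(records) * len(required)
    if total == 0:
        return 1.0
    filled = sum(
        1 for record in records for field in required
        if record.get(field) not in (None, "")
    )
    return filled / total


def is_fresh(last_loaded: datetime, now: datetime, max_age: timedelta) -> bool:
    """Timeliness: True when the last successful load is within the allowed age."""
    return now - last_loaded <= max_age


def mismatched_keys(system_a: Dict[str, str], system_b: Dict[str, str]) -> List[str]:
    """Consistency: keys present in both systems whose values disagree."""
    shared = system_a.keys() & system_b.keys()
    return sorted(key for key in shared if system_a[key] != system_b[key])


# Illustrative data only.
customers = [
    {"customer_id": "c1", "email": "a@example.com", "plan": "pro"},
    {"customer_id": "c2", "email": "", "plan": "free"},
    {"customer_id": "c3", "email": "c@example.com", "plan": None},
    {"customer_id": "c4", "email": "d@example.com", "plan": "pro"},
]
completeness_score = completeness(customers, ["customer_id", "email", "plan"])

now = datetime(2024, 1, 5, 12, 0, tzinfo=timezone.utc)
last_loaded = datetime(2024, 1, 5, 9, 0, tzinfo=timezone.utc)
fresh = is_fresh(last_loaded, now, max_age=timedelta(hours=6))

crm_names = {"c1": "Acme Ltd", "c2": "Globex"}
billing_names = {"c1": "Acme Ltd", "c2": "Globex Inc", "c9": "Initech"}
conflicts = mismatched_keys(crm_names, billing_names)

print(f"completeness: {completeness_score:.0%}")
print(f"fresh: {fresh}")
print(f"conflicting customer names: {conflicts}")
```

The thresholds that count as "good enough" are a business decision; the point of the sketch is only that each feature can be expressed as a check that runs on a schedule rather than a judgment made by eye.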

Business benefits of data reliability: Why is it important?

Data reliability separates businesses that think they’re data-driven from those that actually are.

Here’s why it matters so much:

- Smarter business decisions: Every strategy, forecast, or investment plan relies on data. If the data behind your business intelligence is unreliable, the decisions built on it will cost your business. Reliable data gives leaders confidence that they’re acting on truth, not assumption. It helps optimize operations, spot risks early, and scale decisions with precision.

- Better AI and machine learning outcomes: AI is only as smart as the data it learns from. Feed your models inconsistent or biased data, and you’ll get distorted predictions for credit scoring, customer churn, or fraud detection. Reliable data improves model accuracy, reduces bias, and helps your AI deliver insights you can trust

- Staying compliant and audit-ready: In industries like healthcare, finance, or cybersecurity, unreliable data can put you out of compliance. Regulations like GDPR and HIPAA demand complete, accurate, and traceable data. A strong reliability framework keeps your organization audit-ready and protects you from hefty fines or reputational damage

- Protecting customer trust: Nothing erodes trust faster than bad data, such as wrong invoices, duplicate charges, or emails sent to the wrong person. Reliable data ensures every interaction feels seamless and personalized. When customers see accuracy and consistency, they see professionalism, and that builds long-term loyalty

What are some data reliability frameworks?

How do you measure data reliability?

How do you ensure data reliability?

