An Enterprise Guide To Building RAG-based LLM Applications for Production

Table of Contents

TL; DR

If you're keen to build large language model (LLM) apps using Retrieval-Augmented Generation (RAG), the quality of your data is everything.

Your AI is only as good as the information you feed it. Yet many teams skip or rush data preparation, only to face hallucinations, slow responses, inconsistent answers, or compromised performance.

Read this guide to explore how you can prepare your data for RAG and LLM apps, step by step.

Whether you’re building internal copilots, smarter chatbots, or research assistants, RAG is the missing link between memory and intelligence. Instead of hallucinating facts, RAG pulls in verified, up-to-date knowledge, making AI smarter, more reliable, and enterprise-ready.

- Aniket Kumar Singh, SRE II, JP Morgan

LinkedIn Post

Understanding RAG

Retrieval-Augmented Generation (RAG) is a powerful framework that combines the best of traditional information retrieval and generative language models. RAG systems rely on what a model already knows (which is limited to its training data) while actively fetching relevant information from external data sources like documents, websites, or databases in real-time.

Here's how it works:

- When a user asks a question, a retriever component first searches a knowledge base to find the most relevant documents or chunks of information

- Next, these documents are then passed to a language model, which generates a final answer using both its trained knowledge and the retrieved content

This process makes RAG systems especially useful for building LLM applications that require up-to-date, domain-specific, or context-aware responses.

RAG is increasingly being used in customer support chatbots, legal and healthcare tools, enterprise search, and other applications where accurate, source-grounded information is critical. But the performance of RAG hinges heavily on one thing: the quality and structure of the underlying data.

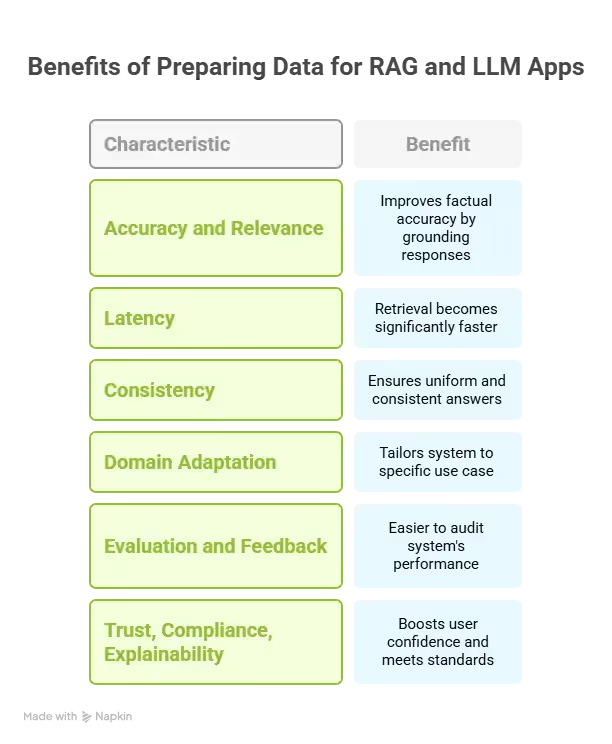

Benefits of preparing data for RAG and LLM apps

Before diving into the “how,” let’s understand the “why.” Many teams jump straight into model selection and prompt tuning, but neglecting the data layer can seriously impact performance. Proper data preparation is the single most impactful way to improve the output quality of Retrieval-Augmented Generation (RAG) systems.

Here’s what you gain when you invest time in preparing your data the right way:

1. Improves accuracy and relevance

The goal of RAG is to reduce hallucinations and increase factual accuracy by grounding responses in your own data. But if that data is messy or disorganized, even the most powerful retrievers won’t find the right content.

Example: Imagine a legal chatbot built for a law firm. If legal contracts and case summaries are stored as large PDFs without sectioning, the retriever might return a 20-page document when the user really needs a single clause. But if you chunk the document by clause or section and label each with metadata like case type or jurisdiction, the retriever can deliver precise context, and the LLM generates a relevant, accurate answer.

2. Reduces latency

RAG systems are only as fast as their slowest component. When your data is well-chunked and indexed, retrieval becomes significantly faster especially at scale.

Example: In customer support applications, users expect instant answers. If your knowledge base is optimized with 200-word chunks stored in a fast vector database like Pinecone or Weaviate, your system can respond in under a second. On the flip side, if you're using 10,000-word blobs or PDF scans, the retriever may slow down or time out, frustrating users.

3. Ensures consistency

Users expect consistent answers to similar questions. That’s only possible when the underlying data is structured and uniform. Inconsistencies—like outdated versions of documents or duplicates—can confuse both the retriever and the LLM, leading to conflicting outputs.

Example: Say you're building an internal HR assistant for a large company. If some documents use “Casual Leave” and others call it “Time-Off Leave,” and the definitions vary slightly across departments, your AI might give different answers depending on the chunk it finds first. By standardizing terminology and cleaning legacy data, you ensure the assistant delivers consistent policies and avoids legal risk.

4. Enhances domain adaptation

Generic LLMs often underperform in niche domains. Feeding them with structured, domain-specific data helps bridge that gap and tailors the system to your exact use case.

Example: In the medical field, accuracy is non-negotiable. A RAG-powered symptom checker trained on general medical knowledge might confuse two similar conditions. But if you feed it cleaned, chunked clinical notes, diagnostic guidelines, and hospital protocols, it can deliver much more precise recommendations tailored to your institution’s practices.

5. Enables better evaluation and feedback loops

Structured data makes it easier to audit your system’s performance. You can trace which chunk was retrieved, what metadata it carried, and how it influenced the final output. This transparency is crucial for debugging and continuous improvement.

Example: Let’s say your fintech chatbot gave the wrong interest rate for a loan product. With properly indexed data and metadata like “source: 2023 Loan Policy Document,” you can trace the exact chunk used and fix the outdated information. This audit trail also helps in setting up reinforcement learning or human-in-the-loop review processes to improve over time.

6. Boosts trust, compliance, and explainability

In enterprise settings, especially in regulated industries, users need to trust where the answer came from. Structured and well-prepared data makes source attribution easy, which boosts user confidence and meets compliance standards.

Example: For a financial services app, being able to say “This answer is based on the latest RBI circular, published in March 2024” can make the difference between user adoption and regulatory rejection. Good metadata tagging and chunk-level citations help enable this level of explainability.

Steps to prepare and build data for RAG and LLM apps

When building RAG or LLM-based applications, teams often obsess over which model to use or how to tweak prompts. In reality, the quality and structure of your database plays a more crucial role.

A well-prepared database doesn’t just help with better retrieval, it empowers your AI to think, reason, and respond like an expert in your domain. This preparation bridges the gap between raw, messy enterprise data and usable, high-signal context for the LLM.

Here’s how you can prepare your database for high-performance RAG applications to turn chaotic documents, emails, PDFs, wikis, and spreadsheets into structured intelligence:

Step 1: Data collection

Start by identifying all relevant internal and external data sources that your application should draw from. The goal here is coverage and credibility. Avoid bringing in irrelevant or outdated data as it can introduce noise and reduce model accuracy.

Make sure the data is domain-specific and relevant to your users' needs.

Examples:

- For a customer support assistant: help docs, product manuals, ticket logs, and FAQs.

- For a legal assistant: contracts, case summaries, laws, and firm-specific interpretations.

- For a sales copilot: CRM notes, pitch decks, email conversations, and case studies.

Step 2: Clean and normalize the content

Raw data is rarely clean. You’ll likely deal with duplicates, inconsistent formats, outdated versions, or canned PDFs and OCR errors. So as part of the next step, clean your data.

Cleaning is an overlooked but important step. Here's what you can do:

- Remove irrelevant data: Filter out headers, footers, navigation menus

- Fix formatting issues: Standardize inconsistent fonts, encodings, or file types

- Correct errors: Fix typos, grammar, or corrupted text that might confuse the model

- Handle duplicates: Remove or merge duplicate entries

Step 3: Chunking the data

Chunking means breaking down large documents into smaller, semantically meaningful units. This is critical because most vector databases store and retrieve at the chunk level.

Best practices for chunking:

- Keep chunks between 100 to 300 words to balance context and retrievability

- Chunk based on semantic structure, not just character length by using headings, paragraphs, or logical sections

For example if you’re an HR working on an employee handbook, break it down into chunks like “Leave Policy,” “Code of Conduct,” and “Remote Work Guidelines” rather than random 200-word blocks. This improves the precision of retrieved content.

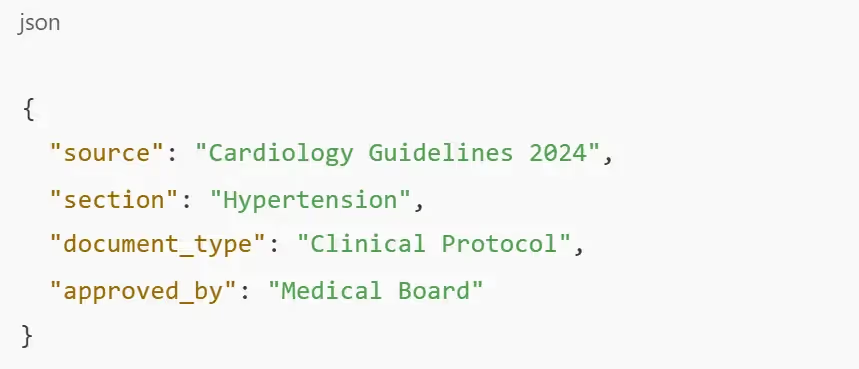

Step 4: Add metadata for contextual filtering

Metadata acts like a map for your retriever. It helps narrow down the right information faster and more accurately. Common metadata includes:

- Title

- Author

- Date created

- Category or topic

- Source URL or file path

For example, in a healthcare chatbot, a medical guideline might have metadata like:

At query time, you can use filters to restrict retrieval to “latest policies” or “only engineering content”.

Step 5: Embed the chunks into a vector store

Now comes the step where your data becomes searchable via semantic meaning. Use an embedding model to convert chunks into vector representations.

Then store them in a vector database like:

- Pinecone or Weaviate (for enterprise scalability)

- FAISS (for local prototyping)

- Chroma or Qdrant (open-source options)

For the best results, always choose an embedding model that aligns with your domain. For financial or legal content, domain-tuned embeddings offer better relevance than generic ones.

Step 6: Optimize your retrieval pipeline

Once your chunks are embedded and stored, you need to fine-tune the retrieval process itself.

For example, for an internal search assistant, you might first retrieve the top 20 chunks based on vector similarity, rerank them using a cross-encoder model, and finally send the top 3 to the LLM.

Key tactics you can explore include:

- Hybrid search: Combine vector similarity with keyword or metadata filters

- Reranking: Use models like Cohere Rerank or BGE Reranker to re-order the top N retrieved results based on relevance

- Context window optimization: Select only the most relevant few chunks to pass to the LLM, instead of flooding it with all top 10 results

Step 7: Set up feedback loops and continuous evaluation

No data pipeline is perfect on Day 1. Set up mechanisms to monitor how well your data supports the LLM’s answers. You can use tools like TruLens, Langfuse, or custom logging to trace each user query through the retriever and the LLM output. Then update or add missing chunks based on user feedback or retrieval failures.

Here are some things you must track:

- Precision & recall of retrieved chunks

- Cases where no relevant chunk was retrieved

- Incorrect or hallucinated responses

To conclude, great LLMs are built using great data

RAG and LLM apps represent the future of intelligent automation, but only if they're built on a solid foundation of well-prepared data. When you clean, structure, and organize your content the right way, you give your AI systems the context they need to answer accurately, quickly, and reliably.

The process from chunking documents to embedding them into a vector database isn’t just a technical checklist. It’s a strategy that directly impacts user satisfaction, system performance, and long-term scalability. As more companies race to deploy generative AI, those with clean, searchable, and high-quality data will have a clear edge.

Take the time to prepare your data right, and your RAG pipeline will return the favor with results that are intelligent, explainable, and aligned with your business goals. Expedite this journey with 5X.

FAQs

What is Retrieval-Augmented Generation (RAG)?

Why is data preparation crucial for RAG and LLM applications?

What are the common challenges in preparing data for RAG and LLM applications?

How does 5X assist in data preparation for RAG and LLM applications?

Building a data platform doesn’t have to be hectic. Spending over four months and 20% dev time just to set up your data platform is ridiculous. Make 5X your data partner with faster setups, lower upfront costs, and 0% dev time. Let your data engineering team focus on actioning insights, not building infrastructure ;)

Book a free consultationHere are some next steps you can take:

- Want to see it in action? Request a free demo.

- Want more guidance on using Preset via 5X? Explore our Help Docs.

- Ready to consolidate your data pipeline? Chat with us now.

How retail leaders unlock hidden profits and 10% margins

Retailers are sitting on untapped profit opportunities—through pricing, inventory, and procurement. Find out how to uncover these hidden gains in our free webinar.

Save your spot

%201.svg)

.png)