Step-by-Step Guide: How Enterprises Can Connect LLMs to Their Data

Table of Contents

TL; DR

You’ve invested in an LLM. Maybe more than one. The models work. But when it comes to your internal data, results fall apart.

Answers are incomplete. Context is missing. Hallucinations show up in places that should be facts.

Most teams assume the model is the problem. In reality, the failure point is upstream—your data isn’t reaching the model the way it should.

The fix is infrastructure.

“If you want answers from a GPT or LLM, you have to talk to it like a human being, like you're talking to a data scientist. But you have to give it all of the information that you want it to have.”

~ Kshitij Kumar, ex-Chief Data Officer, Haleon

Empowering thousands with data: Haleon’s data literacy journey

This guide is for teams who’ve already tried the quick hacks. It’s for those who need a real pipeline—one that integrates, secures, and orchestrates data for RAG and enterprise-scale LLM deployments.

If that’s where you are, this is how you move forward.

3 Methods for enterprise data integration with LLM

There are multiple routes to integrating your data with your enterprise LLM: training from scratch, fine-tuning, or retrieval-augmented generation (RAG). But how do you decide which path is best?

Each data integration method has its strengths, quirks, and hidden pitfalls. Let's dive into these choices clearly to find the perfect fit for your enterprise's journey.

Method 1: Training an LLM from scratch

Training your own LLM from scratch sounds great in theory. You control everything. Every detail, every dataset, and every decision. But the reality is far from it.

When training from scratch might make sense:

- Your data is ultra-specialized: If your enterprise deals with extremely niche areas, like specialized pharmaceutical research, proprietary legal processes, or classified security protocols, a generic LLM trained on internet data might do more harm than good.

- You want complete control and transparency: Some enterprises, especially those in regulated industries like finance or healthcare, might prefer full ownership and transparency of every parameter in the model

However, this approach comes with steep caveats:

- Resource-intensive and expensive: Building a large model from scratch can easily cost millions of dollars. You're not just buying computational resources; you're committing extensive human capital for managing infrastructure, data engineering, and ongoing optimization

- Time-consuming: Forget agility. Training from scratch can take months, sometimes years, before seeing practical results

- Risk of inefficiency: Publicly available pre-trained models (like GPT-4) already encapsulate general language knowledge that's costly to replicate. Starting from nothing means you're doing a massive duplication of effort

Method 2: Fine-tuning an LLM

Fine-tuning involves adapting a pre-trained LLM to your specific domain by training it further on your proprietary data. This approach is beneficial when:

- Your domain is highly specialized: For instance, legal, medical, or technical fields where terminology and context are unique

- You require consistent outputs: Tasks like code generation or specific formatting benefit from fine-tuning

- Data is relatively static: If your internal knowledge doesn't change frequently, fine-tuning can be effective

However, fine-tuning has its drawbacks:

- High cost and complexity: It demands significant computational resources and expertise

- Not ideal for dynamic data: Frequent changes in your data require continuous re-training, which is resource-intensive

- Risk of overfitting: The model might become too tailored to your data, reducing its generalization capabilities

Method 3 (Recommended): Retrieval-augmented generation (RAG)

Retrieval-Augmented Generation (RAG) is the method that makes the most business and technical sense.

RAG enhances LLMs by allowing them to fetch relevant information from external data sources at query time. This offers several advantages:

- Dynamic data integration: RAG can access the most up-to-date information from wikis, Slack threads, CRM logs, support tickets, policy docs, without retraining the model

- Cost-effective: Fine-tuning costs time and compute. RAG doesn’t. You don’t retrain the model, you don’t need specialized ML ops infrastructure, and you can ship pilots fast, sometimes in weeks

- Improved accuracy: By grounding responses in real-time data, RAG minimizes hallucinations

- Fits your architecture: You can plug RAG into your existing stack with tools like 5X to manage ingestion, transformation, vectorization, and access control

When building your RAG platform, consider the following best practices:

- Effective chunking: Break down documents into meaningful sections to improve retrieval accuracy

- Metadata tagging: Enhance searchability by tagging data with relevant metadata

Also read: How to Build a Custom GPT with Your Enterprise Data

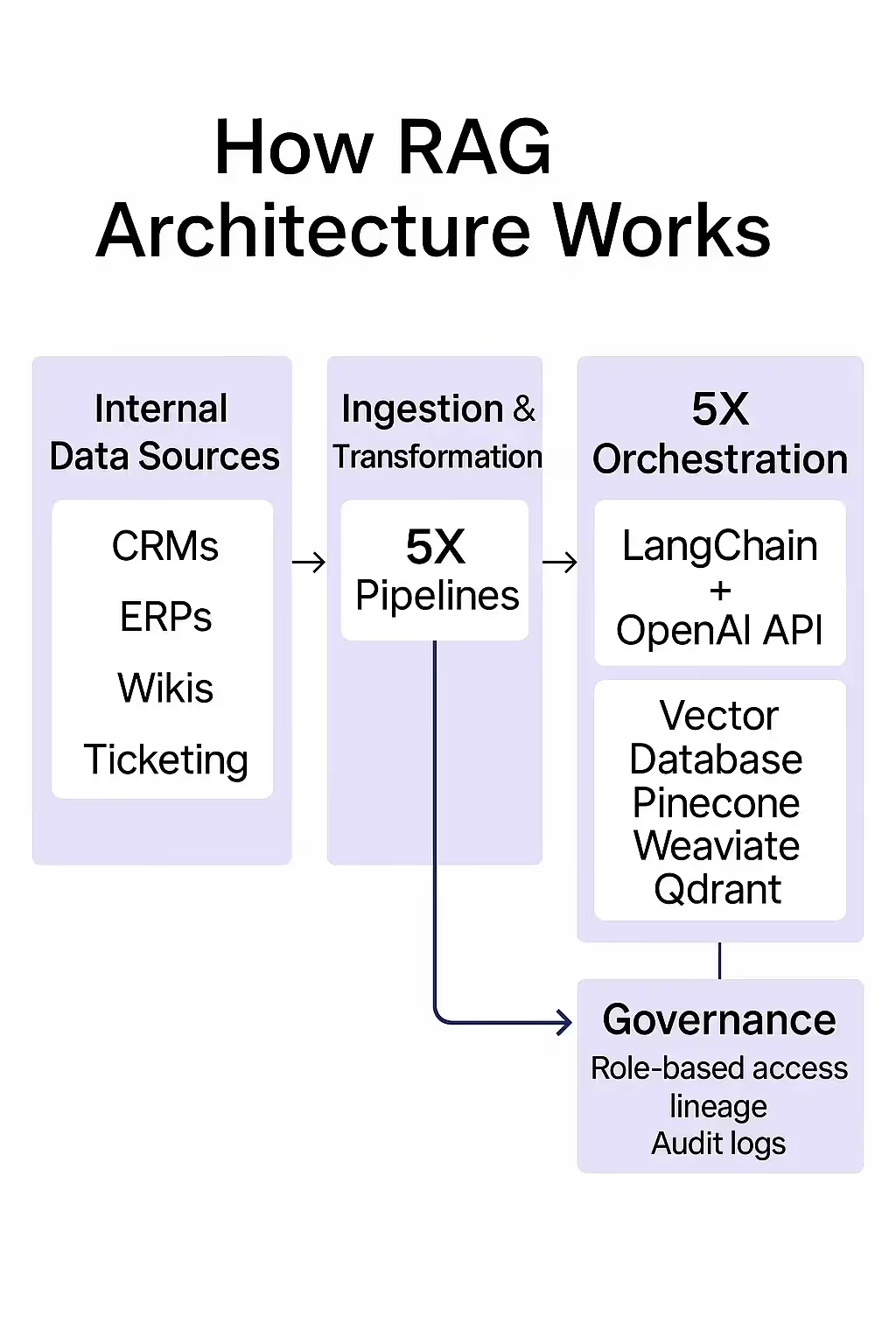

How a RAG architecture works (and where 5X fits in)

RAG isn't a plugin; it’s a pipeline.

If you want to move fast without breaking enterprise data governance, you need more than a clever LLM call. You need structured data ingestion, transformation, search, retrieval, and control.

Here’s how the RAG stack actually works in an enterprise environment. And how 5X becomes the backbone.

The ideal stack for enterprise RAG

Think of this as the minimum viable architecture for running RAG at scale inside an enterprise.

Data sources:

- CRMs (Salesforce, HubSpot)

- ERPs (SAP, Oracle)

- Wikis (Confluence, Notion)

- Ticketing systems (Zendesk, Jira)

- Share drives, contracts, logs, even emails

Ingestion and transformation:

- Use 500+ connectors in 5X to connect and extract from all structured and unstructured systems

- Apply transformation logic: normalize formats, clean data, apply tagging

- Real-time updates or scheduled syncs via orchestrated jobs

Orchestration layer:

- Managed inside 5X

- Pushes clean data to vector databases

- Coordinates calls between data stores, LangChain workflows, and your LLM endpoints (OpenAI, Azure OpenAI, Claude, etc.)

Storage layer (vector DBs):

- Pinecone, Weaviate, Qdrant, whatever fits your retrieval use case

- Stores embeddings and metadata-rich chunks indexed by relevance

Query + generation:

- When a user asks a question, LangChain routes it to the right retrieval source

- The LLM pulls answers grounded in internal content

- Optionally, 5X can log every request and response for auditing

Governance:

- Built-in with 5X

- Lineage across ingestion → retrieval

- Role-based access

- Usage monitoring

- Field-level controls

Also read: Best Data Governance Tools in 2025

Why you need more than just vector search

Vector search alone gives you recall. It doesn’t give you control.

Enterprises deal with:

- Structured + unstructured data in the same conversation

- Constantly changing sources

- Legal, privacy, and compliance requirements

This means:

- You need metadata-aware chunking (not just splitting PDFs)

- You need to link related records—a contract clause and its SLA in the same response

- You need updates that happen via APIs, not manual retraining or re-uploading files

Batch jobs won't cut it. Context gets stale. Compliance gets risky.

That’s why 5X matters.

5X is the architecture you need to make RAG work at scale:

- Unified ingestion: CRMs, ERPs, ticketing, SharePoint—pipe them all in

- Orchestration that runs cleanly: manage workflows, not Python scripts

- Transformation built-in: format, enrich, and tag data before it hits your vector DB

- Governance baked in: see every step from source to LLM answer

You don’t need to duct-tape 6 tools together. You need a single platform where everything—ingestion, transformation, lineage, scheduling, and access—already works.

That’s what 5X delivers.

If your LLM needs to be fast, accurate, and enterprise-ready, this is your stack.

This is your control layer.

Why connect enterprise data to LLMs?

Your enterprise LLM, impressive though it might sound, is essentially a highly sophisticated parrot. A "stochastic parrot," to be precise—a phrase coined brilliantly by Emily M. Bender and her colleagues in their seminal paper:

“An LM is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.”

~ Emily M. Bender et al., ACM FAccT, 2021

In other words, your LLM is fantastic at mimicking human language, but it has no inherent grasp of your company's jargon, workflows, or nuances, unless, of course, you explicitly feed that knowledge into it.

You've got the kind of questions only your own internal documents can answer, like contracts, Slack conversations, customer tickets, and the elusive "tribal knowledge" hiding in company wikis. Without integrating this data, asking your enterprise LLM anything specific becomes akin to chatting with a very articulate stranger who doesn't quite understand what you're talking about.

The value of integrating enterprise data is clear when you consider that roughly 80% of data is unstructured. Emails, PDFs, Slack conversations, CRMs, support tickets, all this vital business intelligence stays untapped if not integrated correctly.

Connecting enterprise data to your LLMs transforms these “smart parrots” into strategic copilots, helping teams:

- Access real-time, company-specific insights

- Make accurate and informed decisions

- Respond rapidly and confidently to complex queries

An “Enterprise LLM” without real enterprise data is just another expensive toy. But give it context and it evolves into an intelligence tool you can't afford to ignore.

Also read: AI Data Integration Guide: Definition, Benefits, and Use Cases in 2024

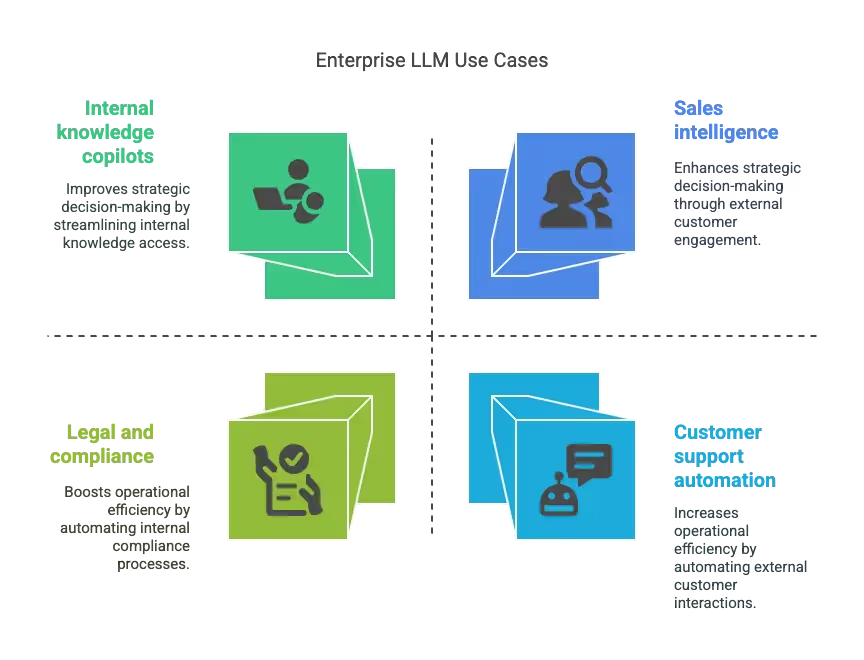

4 Common use-cases for enterprise LLMs

Here are four high-leverage use cases where enterprises rebuilt how knowledge flows through their systems.

1. Internal knowledge copilots

Enterprises are replacing traditional document searches with chat-based interfaces powered by RAG systems. These systems allow employees to query internal knowledge bases conversationally, improving efficiency and accessibility.

Shorenstein Properties, a real estate investment firm, implemented a RAG-based AI system to automate file tagging and organize data more efficiently. This system enables employees to interact with internal documents through a chat interface, streamlining access to company information.

2. Customer support and help desk automation

LLMs are streamlining customer service by automating responses to common inquiries and assisting support agents with real-time information retrieval.

DoorDash developed a RAG-based support system that integrates with their customer service platform. This system uses an LLM Judge to assess chatbot performance across various metrics, enhancing the quality and relevance of responses provided to customers.

3. Legal and compliance

Legal departments are utilizing RAG systems to expedite contract reviews, extract relevant clauses, and ensure compliance with policies and regulations.

4. Sales intelligence

Sales teams are employing RAG systems to summarize account information, track opportunities, and analyze communication histories, enhancing decision-making and customer engagement.

Grainger, a major distributor of maintenance, repair, and operations supplies, implemented a RAG system to manage their extensive product catalog. This system helps sales representatives quickly access summarized product information and respond to customer inquiries more effectively.

Also read: How Data Quality Impacts Your Business

Get started with secure enterprise LLMs using 5X

Most enterprise LLM projects stall for one reason: messy data. Inconsistent formats. Siloed systems. No clear lineage.

5X fixes that.

It gives you one platform to centralize, transform, and route your internal data securely to any LLM you choose.

Whether you're building:

- An internal knowledge copilot

- A customer support agent

- A real-time sales intelligence tool

If you want answers you can trust, this is where you start.

FAQs

What’s the best approach for enterprises: fine-tuning or retrieval-based?

Do I need a vector database to connect LLMs with enterprise data?

How do I govern data access in RAG workflows?

What’s the fastest way to get started?

Building a data platform doesn’t have to be hectic. Spending over four months and 20% dev time just to set up your data platform is ridiculous. Make 5X your data partner with faster setups, lower upfront costs, and 0% dev time. Let your data engineering team focus on actioning insights, not building infrastructure ;)

Book a free consultationHere are some next steps you can take:

- Want to see it in action? Request a free demo.

- Want more guidance on using Preset via 5X? Explore our Help Docs.

- Ready to consolidate your data pipeline? Chat with us now.

How retail leaders unlock hidden profits and 10% margins

Retailers are sitting on untapped profit opportunities—through pricing, inventory, and procurement. Find out how to uncover these hidden gains in our free webinar.

Save your spot

%201.svg)

.png)